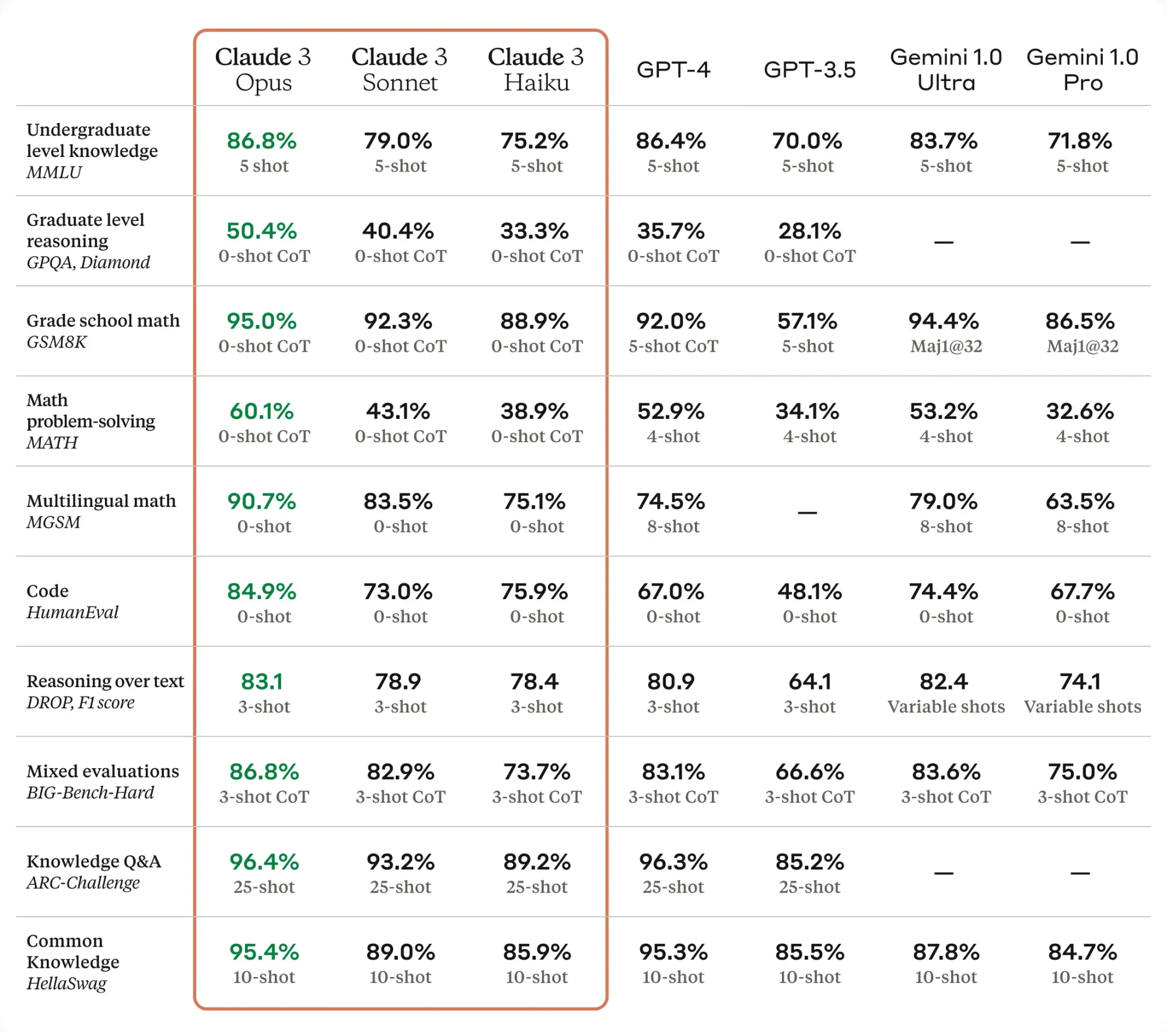

Anthropic recently released its new Claude 3 family of models, which have been shown to outperform both GPT-4 & Gemini Ultra on a number of LLM benchmarks.

In this guide, let's look at how to get started with the Claude API and begin experimenting with these new models. First, let's look at what each model is capable of.

Claude 3 Models

As described in the Claude documentation, here are the key strengths of each new model:

- Claude 3 Opus: The most powerful model, which outperforms GPT-4 & Gemini on most of the common benchmarks, at least at the time of writing.

- Claude 3 Sonnet: Balances intelligence and speed, making it a good choice for enterprise workloads and scaled AI deployments.

- Claude 3 Haiku: The fastest model, designed for near-instant responses.

Accessing the Claude API

Now let's look at how to access these models through the API and the Workbench. First, sign up for an account in the Anthropic Console and retrieve your API key.
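A common pattern is to keep the key out of your code entirely. You store it in the ANTHROPIC_API_KEY environment variable, which the Python SDK (installed with pip install anthropic) reads by default, as the generated code later in this guide notes. Below is a minimal sketch of a hypothetical helper, get_api_key, that fails early with a clear message if the variable is missing. It uses only the standard library.

```python
import os


def get_api_key(env_var: str = "ANTHROPIC_API_KEY") -> str:
    """Return the API key stored in env_var, or raise a helpful error.

    Hypothetical helper for this guide; it is not part of the anthropic SDK.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"{env_var} is not set; export it in your shell before running, "
            f"e.g. export {env_var}=<your key>"
        )
    return key
```

Once the variable is set, anthropic.Anthropic(api_key=get_api_key()) and a plain anthropic.Anthropic() use the same key. The helper only adds an earlier, more readable error.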

Prompting with the Claude Workbench

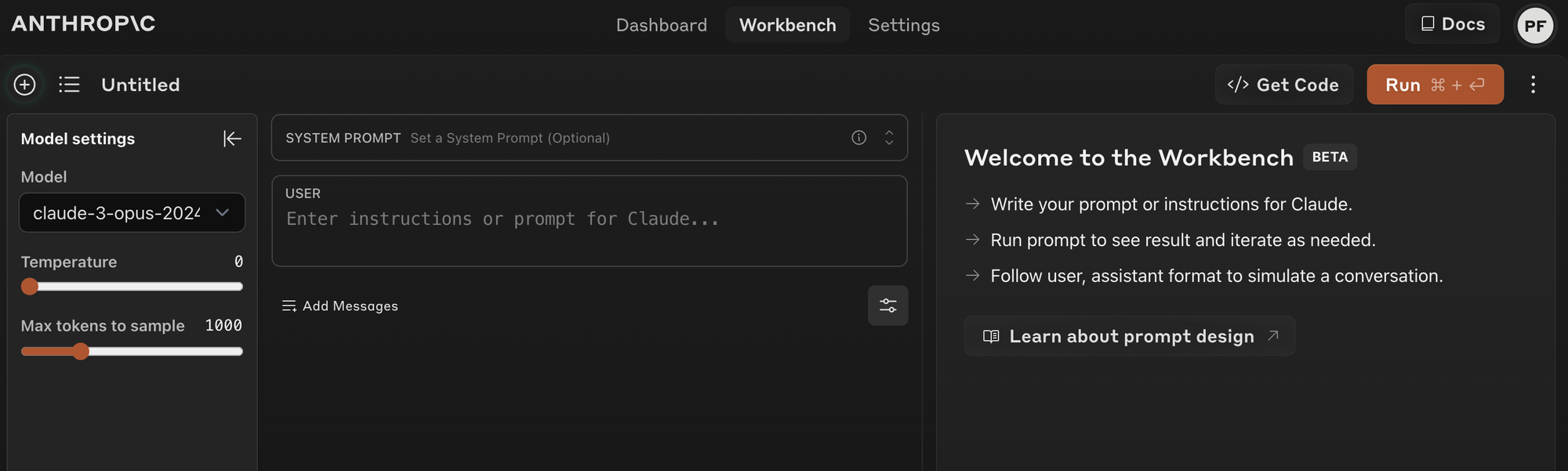

Before we start writing code, you can also experiment with prompts in Anthropic's Workbench, which lets you test the API directly in your browser.

The Workbench allows you to create and test prompts within your Console account. You can enter your prompt into the "Human" dialogue box and click "Run" to test Claude's output.

This works much like the OpenAI Playground: you can adjust various LLM settings and retrieve the code for your prompts:

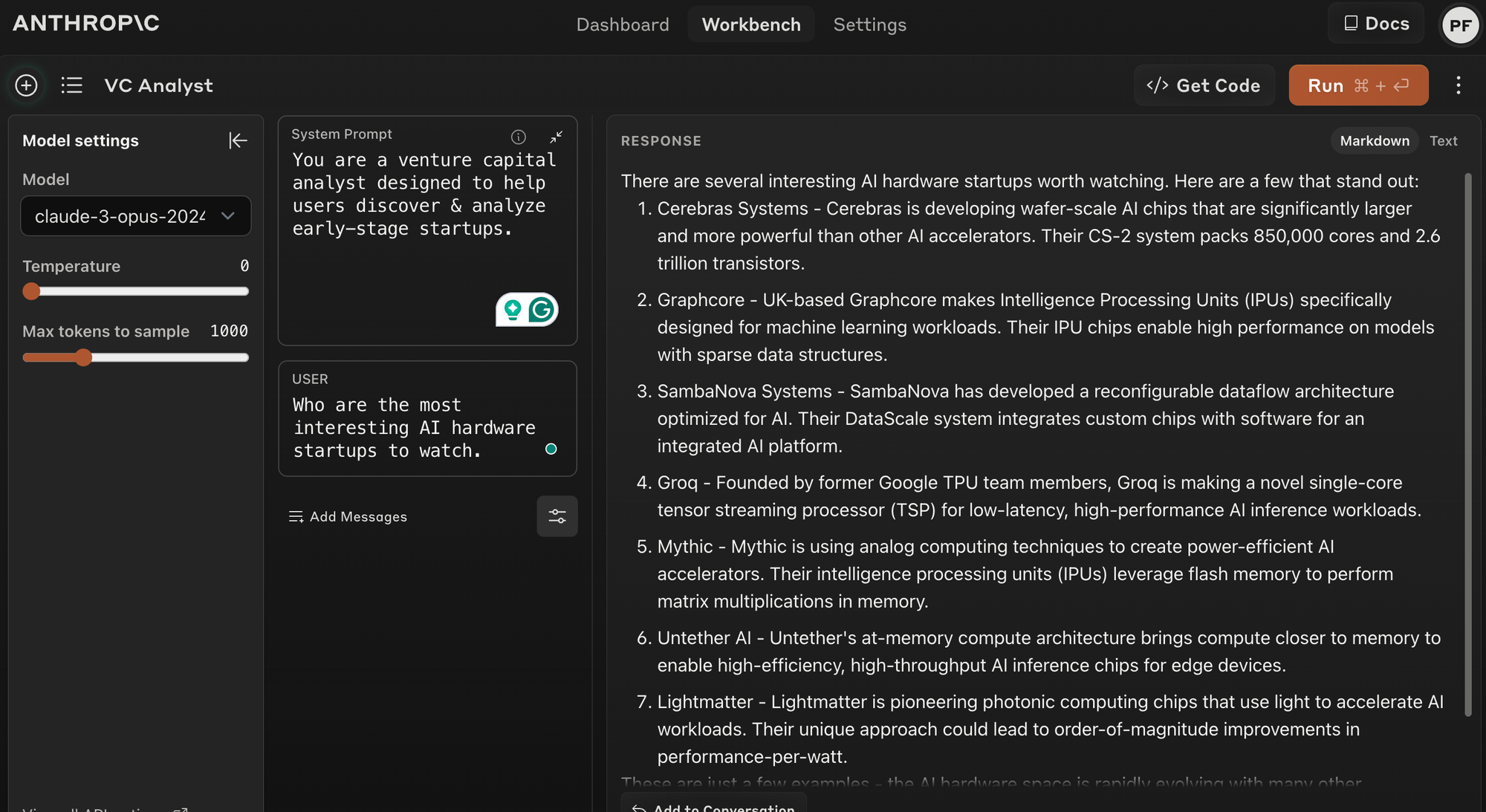

Let's test it out with the following system prompt:

You are a venture capital analyst designed to help users discover & analyze early-stage startups.

Now let's add a user prompt:

Who are the most interesting AI hardware startups to watch?

Now we can easily get the code for this API call with the "Get code" button:

import anthropic

client = anthropic.Anthropic(
    # defaults to os.environ.get("ANTHROPIC_API_KEY"); replace the placeholder or omit this argument
    api_key="my_api_key",
)

message = client.messages.create(
    model="claude-3-opus-20240229",
    max_tokens=1000,
    temperature=0,
    system="You are a venture capital analyst designed to help users discover & analyze early-stage startups.",
    messages=[
        {
            "role": "user",
            "content": [
                {
                    "type": "text",
                    "text": "Who are the most interesting AI hardware startups to watch?"
                }
            ]
        }
    ]
)

# message.content is a list of content blocks; print the text of the first one
print(message.content[0].text)

Claude 3 API Parameters

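As you can see from the code above, making an API request is very similar to using OpenAI's Chat Completions API, so the transition is fairly seamless. One notable difference is that the system prompt is passed as a separate system parameter rather than as a message with a "system" role.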
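If you already have conversations stored in OpenAI's format, the main change is moving any "system" messages out of the list. Here is a minimal sketch of a hypothetical converter. It assumes every message's content is a plain string, and it wraps each one in the text content-block format used in this guide.

```python
from typing import Any


def to_anthropic_format(
    openai_messages: list[dict[str, Any]],
) -> tuple[str, list[dict[str, Any]]]:
    """Split OpenAI-style chat messages into (system, messages) for Claude.

    Hypothetical helper: assumes each message's "content" is a plain string.
    """
    system_parts: list[str] = []
    messages: list[dict[str, Any]] = []
    for msg in openai_messages:
        if msg["role"] == "system":
            # Claude takes the system prompt as a separate argument
            system_parts.append(msg["content"])
        else:
            # Wrap plain strings in a single text content block
            messages.append({
                "role": msg["role"],
                "content": [{"type": "text", "text": msg["content"]}],
            })
    return "\n\n".join(system_parts), messages
```

The two return values map directly onto the system= and messages= arguments of client.messages.create().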

In the client.messages.create() request, we can set several LLM parameters:

- model: The model to use, Claude 3 Opus in this case.
- max_tokens: The maximum number of tokens the model can generate in its response.
- temperature: Controls randomness in the model's responses, ranging from 0 to 1. Higher values lead to more varied and creative outputs, while lower values produce more deterministic and predictable replies.
- system: System messages let you provide context, specific instructions, or guidelines on how the model should act or respond before the user asks a question. As the docs highlight, system prompts can include:
  - Task instructions and objectives
  - Personality traits, roles, and tone guidelines
  - Contextual information for the user input
  - Creativity constraints and style guidance
  - External knowledge, data, or reference material
  - Rules, guidelines, and guardrails
  - Output verification standards and requirements
- messages: The messages parameter is an array of dictionaries, each representing a single message in the conversation. Each message contains:
  - role: Indicates who is sending the message, in this case the user.
  - content: Contains the actual content of the message. It's an array that can hold multiple types of content (e.g., text and images). In this case, there's only one content item, of type "text". Each content item contains:
    - type: Specifies the content type, e.g. "text" or "image".
    - text: The actual user input text.
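Because content is an array, a single user message can combine an image with text. The sketch below uses a hypothetical helper, build_user_message. It assumes the base64 image block shape from Anthropic's vision documentation (type "image" with a source containing type, media_type, and data), and it places the optional image before the text.

```python
import base64
from typing import Any, Optional


def build_user_message(
    text: str,
    image_bytes: Optional[bytes] = None,
    media_type: str = "image/png",
) -> dict[str, Any]:
    """Build one user message whose content holds an optional image plus text.

    Hypothetical helper; the image block follows the base64 source format.
    """
    content: list[dict[str, Any]] = []
    if image_bytes is not None:
        content.append({
            "type": "image",
            "source": {
                "type": "base64",
                "media_type": media_type,
                # The API expects the raw bytes as a base64-encoded string
                "data": base64.b64encode(image_bytes).decode("ascii"),
            },
        })
    content.append({"type": "text", "text": text})
    return {"role": "user", "content": content}
```

For example, build_user_message("Describe this chart", open("chart.png", "rb").read()) produces a message you can drop into the messages list. The file name is purely illustrative.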

Even though the API is straightforward to use, it supports rich, multimodal conversations between users and the model, with control over LLM settings, system messages, and more.
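Responses use the same content-block shape: message.content is a list of blocks rather than a single string, so printing it directly shows block objects. Here is a minimal sketch of a hypothetical response_text helper that joins the text of every text block. The offline demo uses SimpleNamespace objects as stand-ins for the SDK's blocks, assuming only that each block exposes type and text attributes.

```python
from types import SimpleNamespace
from typing import Any, Iterable


def response_text(content_blocks: Iterable[Any]) -> str:
    """Concatenate the text of every "text" block in a response's content list."""
    return "".join(
        block.text
        for block in content_blocks
        if getattr(block, "type", None) == "text"
    )


# Offline demo: SimpleNamespace objects stand in for the SDK's content blocks
demo_blocks = [
    SimpleNamespace(type="text", text="Hello, "),
    SimpleNamespace(type="text", text="world"),
]
print(response_text(demo_blocks))  # prints: Hello, world
```

With a real response, print(response_text(message.content)) prints all of the reply's text, not just the first block.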

Multi-Turn Conversations with Claude

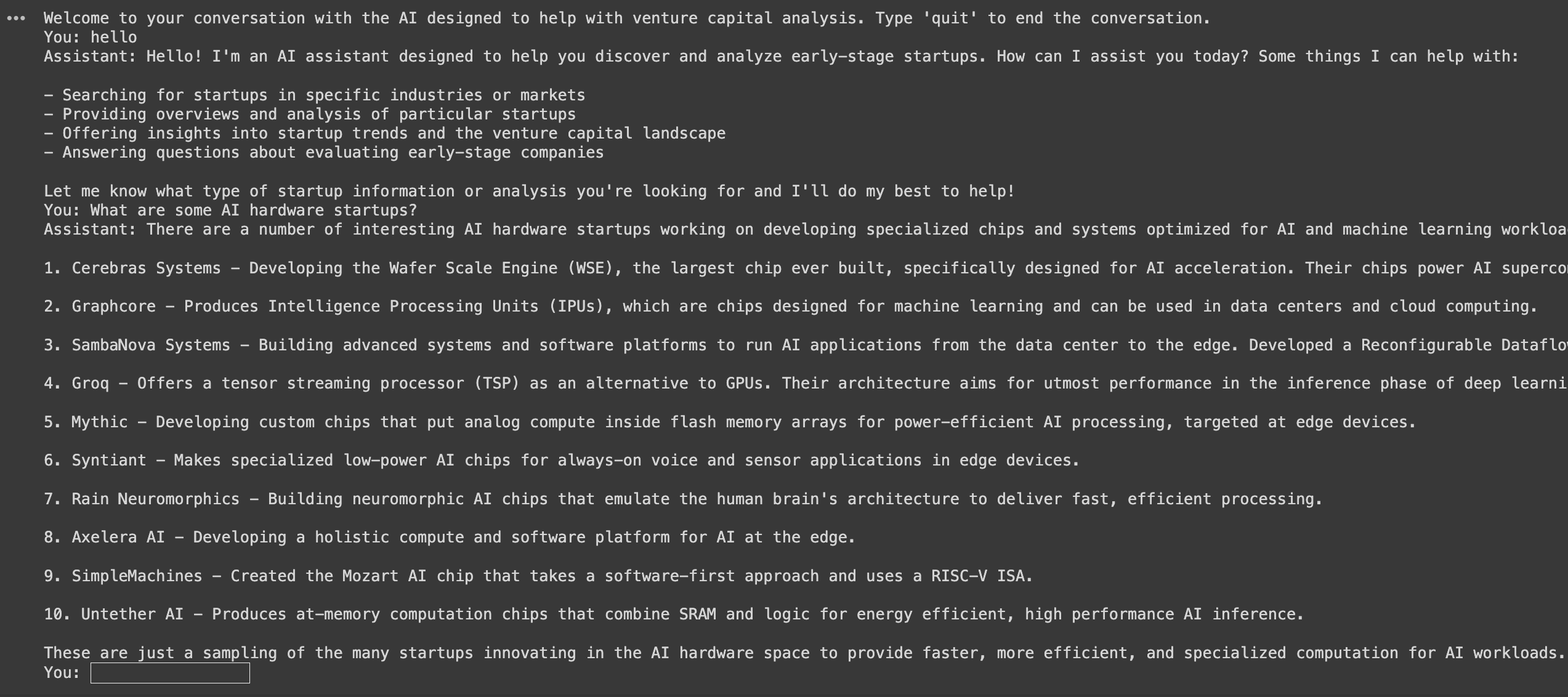

Next, let's create a simple multi-turn conversation loop so we can ask follow-up questions. Here's an overview of how it works:

- Initializes the conversation with a welcome message.

- Enters a loop that prompts the user for input and sends it to Anthropic's API along with the previous conversation history.

- Formats user inputs as messages with the user role, and appends the assistant's responses back into the conversation history so context is maintained across turns.

- Stops the conversation when the user types "quit".

import anthropic

# Create the client; it reads ANTHROPIC_API_KEY from the environment by default
client = anthropic.Anthropic()

# Define the initial context/system message for the conversation
system_message = "You are a venture capital analyst designed to help users discover & analyze early-stage startups."

# Print a welcome message
print("Welcome to your conversation with Claude! Type 'quit' to end the conversation.")

# Initialize the messages list for conversation history; this will store all interactions
messages = []

# Start an infinite loop to process user input until they decide to quit
while True:
    # Ask the user for their input/message
    user_input = input("You: ")

    # Check if the user wants to quit the conversation
    if user_input.strip().lower() == "quit":
        break

    # Append the user's message to the list of messages with the role 'user' and structured content
    messages.append({
        "role": "user",
        "content": [{"type": "text", "text": user_input}]
    })

    # Call the Anthropic API to generate a response based on the conversation history
    try:
        message_response = client.messages.create(
            model="claude-3-opus-20240229",
            max_tokens=1000,
            temperature=0,
            system=system_message,
            messages=messages
        )

        if message_response.content:
            assistant_reply = message_response.content[-1].text
            print("Assistant:", assistant_reply)

            # Append the assistant's reply for the next turn to maintain context
            messages.append({
                "role": "assistant",
                "content": [{"type": "text", "text": assistant_reply}]
            })
        else:
            # No reply came back: drop the unanswered user turn so roles keep alternating
            messages.pop()

    except Exception as e:
        print(f"An error occurred: {e}")
        break

Nice.
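One detail the loop above relies on is turn order. When Claude 3 launched, the Messages API documentation described conversations as starting with a user turn and then alternating between user and assistant. If a request fails or returns no content, the history can end up with two user turns in a row. Below is a minimal sketch of a hypothetical add_turn helper that keeps the history in that shape. It folds a repeated role into the previous turn instead of appending a new one.

```python
from typing import Any


def add_turn(messages: list[dict[str, Any]], role: str, text: str) -> None:
    """Append a text turn, merging it into the previous turn if the role repeats.

    Hypothetical helper: keeps the history starting with a user turn and
    alternating between user and assistant.
    """
    if role not in ("user", "assistant"):
        raise ValueError(f"unsupported role: {role!r}")
    if not messages and role != "user":
        raise ValueError("the first turn must come from the user")
    block = {"type": "text", "text": text}
    if messages and messages[-1]["role"] == role:
        # Same role twice in a row: fold the new text into the existing turn
        messages[-1]["content"].append(block)
    else:
        messages.append({"role": role, "content": [block]})
```

In the loop, add_turn(messages, "user", user_input) and add_turn(messages, "assistant", assistant_reply) would replace the two messages.append(...) calls.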

Summary: Getting Started with the Claude API

In this guide, we saw how to make your first Claude API call, experiment in the Workbench, and build a simple multi-turn conversation. In upcoming guides we'll expand on this and look at more advanced Claude usage, including prompt engineering, function calling, vision, and more.

With Claude beating other LLMs on many major benchmarks, it's clear the model maker wars are heating up now that there are viable alternatives to GPT-4. That said, it's rumoured that OpenAI will release GPT-5 sooner than expected now that GPT-4 is no longer the number one LLM in town...