This week in AI, Google introduced its latest large language model (LLM): Gemini 1.5.

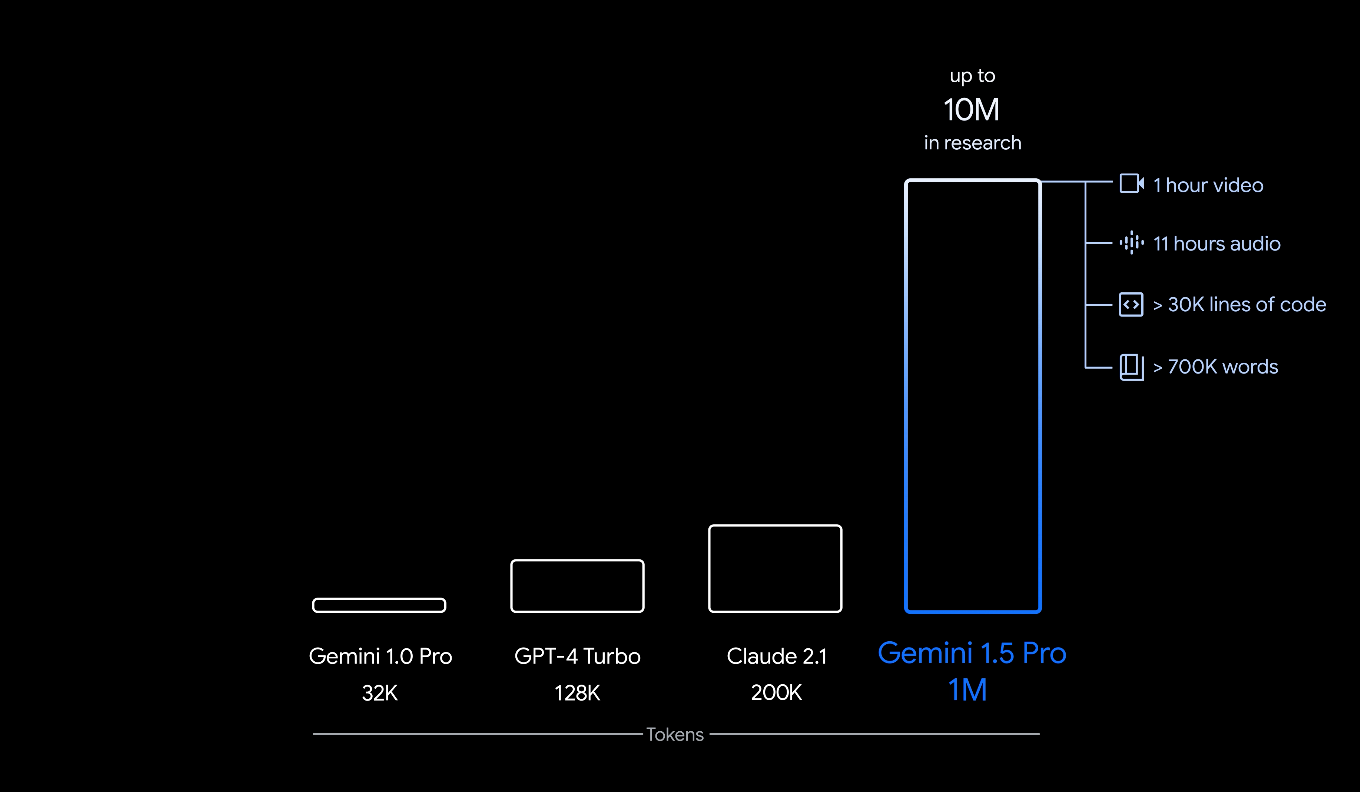

The new multimodal model sets a new benchmark for context windows (the amount of information you can feed into a single prompt) at an impressive 1 million tokens.

Another notable feature of the model is its use of both the Transformer and Mixture-of-Experts (MoE) architectures, which makes it more resource-efficient.

In this article, let's dive into Gemini 1.5's key features and the use cases its 1M-token context window enables.

Key Features

Here are a few key features of Gemini 1.5:

- 1M Context: A significantly larger context window of up to 1 million tokens, surpassing previous models in how much information it can handle in a single prompt.

- Computational Efficiency: It uses a Mixture-of-Experts (MoE) architecture, enhancing computational efficiency and reducing resource requirements for training and operation.

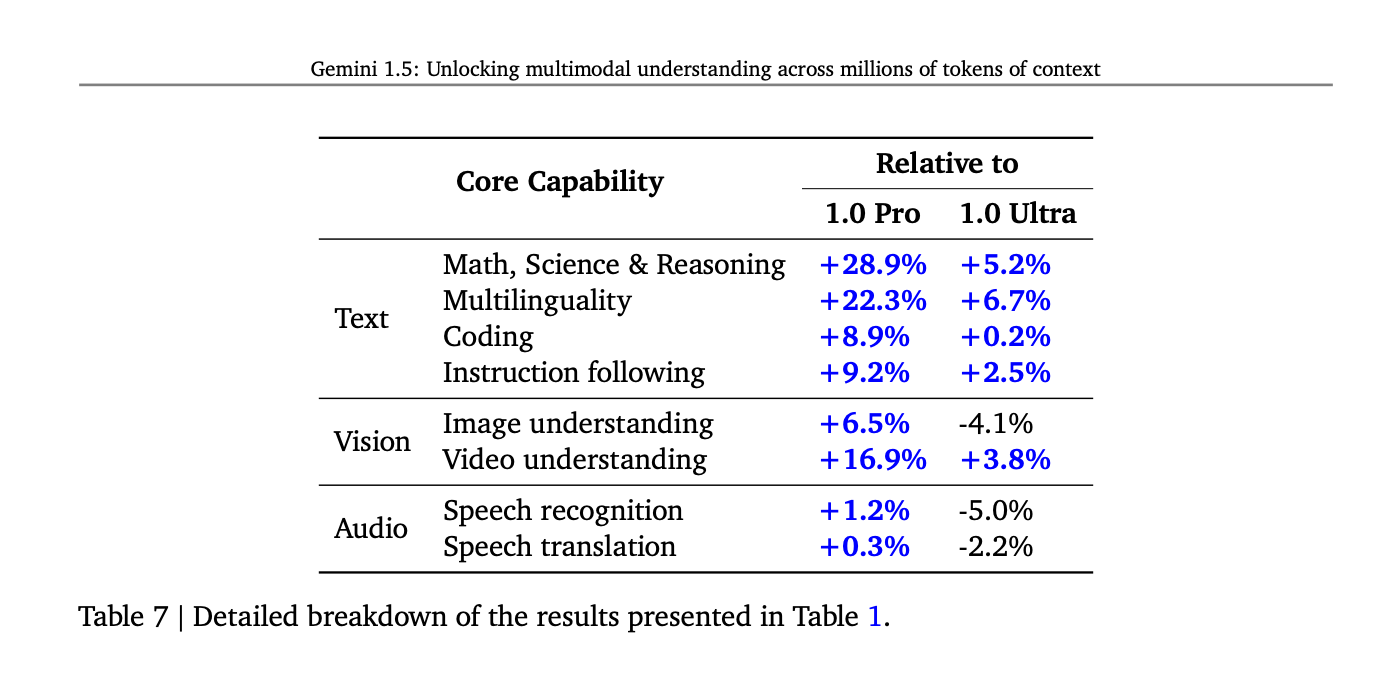

- Performance: Strong results across text, code, image, audio, and video benchmarks, with notable improvements over Gemini 1.0 in coding challenges, multilingual tasks, and instruction following.

1M token context window

Now, what can you actually do with a 1M-token context window? For comparison, OpenAI's latest model, GPT-4 Turbo, has a context window of 128k tokens.

In text-heavy industries like law, finance, and healthcare, this will be a game changer. As Google writes:

The bigger a model’s context window, the more information it can take in and process in a given prompt — making its output more consistent, relevant and useful.

For reference, here's roughly how much information fits in a single 1M-token prompt:

- Text: 700k words, or about 5 full textbooks

- Video: 1 hour

- Audio: 11 hours

- Code: 30k lines of code
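To make these figures concrete, here is a rough Python sketch that estimates a document's token count from its word count and checks which context windows it would fit in. It assumes about 1.43 tokens per word, derived from the ~700k-words-per-1M-tokens figure above; real tokenizers vary by language and content, so treat the result as a ballpark rather than an exact count. The window sizes are the publicly stated limits for GPT-4 Turbo, Claude 2.1, and Gemini 1.5.

```python
import math

# Assumption: ~1.43 tokens per word, implied by "1M tokens ~ 700k words".
# Real tokenizers vary by language and content; this is only a heuristic.
TOKENS_PER_WORD = 1_000_000 / 700_000

# Publicly stated context windows, in tokens.
CONTEXT_WINDOWS = {
    "GPT-4 Turbo": 128_000,
    "Claude 2.1": 200_000,
    "Gemini 1.5 (1M)": 1_000_000,
}


def estimate_tokens(text: str) -> int:
    """Estimate a token count from a whitespace word count (rounded up)."""
    words = len(text.split())
    return math.ceil(words * TOKENS_PER_WORD)


def windows_that_fit(token_count: int) -> list[str]:
    """Return the models whose context window can hold `token_count` tokens."""
    return [name for name, size in CONTEXT_WINDOWS.items() if token_count <= size]


if __name__ == "__main__":
    # A hypothetical 300k-word document, e.g. a large stack of contracts.
    doc = "word " * 300_000
    n = estimate_tokens(doc)
    print(n, windows_that_fit(n))
```

A 300k-word document comes out at roughly 430k tokens by this estimate, which only the 1M-token window can hold in one prompt.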

This breakthrough in long-context understanding opens up new avenues for AI applications, particularly in fields requiring the analysis of large volumes of text, code, or multimodal data.

Here are a few practical examples of what you can do with 1M context:

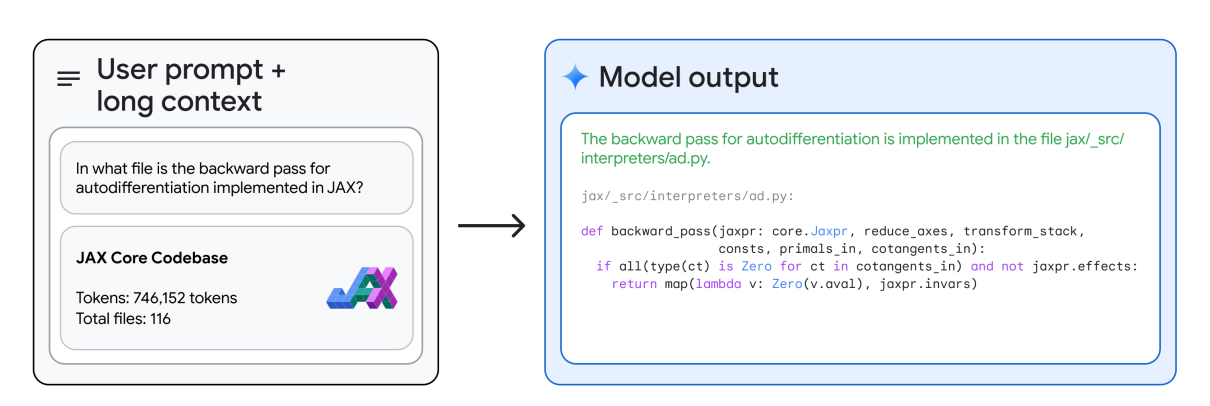

- Codebase Review: Assess an entire codebase of tens of thousands of lines for optimization opportunities and security issues.

- Medical Research: Analyze large volumes of medical papers to uncover trends and insights.

- Legal Document Analysis: Quickly digest and summarize extensive contracts and legal texts.

- Financial Market Analysis: Evaluate multiple financial reports for market insights.

The list goes on...
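For a use case like codebase review, the first practical step is getting the code into a single prompt. The sketch below walks a directory, concatenates source files with a header per file, and skips any file that would push an estimated token budget past the limit. The 4-characters-per-token ratio and the `pack_codebase` helper are illustrative assumptions, and the actual call to Gemini is deliberately left out rather than guessing at an API.

```python
from pathlib import Path

# Assumption: roughly 4 characters per token, a common rule of thumb.
# The real count depends on the model's tokenizer.
CHARS_PER_TOKEN = 4
CONTEXT_LIMIT = 1_000_000  # Gemini 1.5's advertised window, in tokens


def pack_codebase(root, exts=(".py", ".js", ".ts"), limit=CONTEXT_LIMIT):
    """Concatenate source files under `root` into one prompt string.

    Hypothetical helper for illustration. Returns
    (prompt, estimated_tokens, skipped_files); files are added in sorted
    path order, and any file that would exceed `limit` is skipped.
    """
    base = Path(root)
    parts, used, skipped = [], 0, []
    for path in sorted(base.rglob("*")):
        if not path.is_file() or path.suffix not in exts:
            continue
        text = path.read_text(encoding="utf-8", errors="replace")
        # Label each file so the model can refer to locations in its review.
        chunk = f"### File: {path.relative_to(base)}\n{text}\n"
        cost = len(chunk) // CHARS_PER_TOKEN + 1  # rough estimate, rounded up
        if used + cost > limit:
            skipped.append(str(path))
            continue
        parts.append(chunk)
        used += cost
    return "".join(parts), used, skipped
```

You would call it as `prompt, used, skipped = pack_codebase("my_project")` (a hypothetical path), append your review question to `prompt`, and send the result through whichever Gemini client you use. A non-empty `skipped` list tells you the codebase did not fit.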

Mixture-of-Experts (MoE) & Transformer Architecture

Another interesting aspect of Gemini 1.5 is its use of both the MoE and Transformer architectures. Here's a brief overview of what each of them does:

Transformers

At the heart of Gemini 1.5 are Transformers, a type of model architecture that's especially good at handling sequences of data, like the sentences in a text or a series of video frames. They excel at capturing context and the relationships between elements in the data, which makes them ideal for natural language processing tasks. In Gemini 1.5, Transformers help the model grasp complex patterns in large datasets, from written text to images and sounds.
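The core operation that lets a Transformer relate every element of a sequence to every other element is self-attention. Below is a minimal single-head sketch of the standard scaled dot-product formulation in plain Python. It is not Gemini's implementation, which Google has not published, and it simplifies by using the input vectors directly as queries, keys, and values instead of learned projection matrices.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Single-head scaled dot-product self-attention over a sequence.

    x: list of token vectors (each a list of floats). Simplification:
    queries, keys, and values are the inputs themselves; real
    Transformers apply learned projections first.
    """
    d = len(x[0])
    out = []
    for q in x:
        # Score this token against every token in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        weights = softmax(scores)
        # Output: attention-weighted average of all value vectors.
        out.append([sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)])
    return out
```

Each output vector mixes information from the whole sequence, weighted by similarity, which is how context from anywhere in a long prompt can influence every position.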

Mixture-of-Experts (MoE)

Think of MoE as a team of specialists, where each member (or "expert") is really good at a specific kind of task. Instead of having one large model try to do everything, MoE routes different parts of a problem to the best-suited experts. This way, only the necessary parts of the model are activated for a given input, which makes the whole system more efficient: it spends resources (like computing power) only where they're most needed.
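A toy version of MoE routing helps show what "only the necessary parts of the model are activated" means in practice. This sketch uses top-k gating, a common MoE routing scheme: a gate scores every expert for an input, keeps the k best, and mixes only their outputs. The gate weights, the experts, and k=2 are hypothetical stand-ins; Google has not disclosed the details of Gemini 1.5's router.

```python
import math


def top_k_route(token, gate_weights, k=2):
    """Pick the k highest-scoring experts for one input vector.

    gate_weights[i] is a hypothetical learned vector for expert i; the
    gate score is its dot product with the input. Returns a list of
    (expert_index, mixing_weight), softmax-normalized over the top k.
    """
    scores = [sum(t * w for t, w in zip(token, gw)) for gw in gate_weights]
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    m = max(scores[i] for i in top)
    exps = {i: math.exp(scores[i] - m) for i in top}
    total = sum(exps.values())
    return [(i, exps[i] / total) for i in top]


def moe_layer(token, experts, gate_weights, k=2):
    """Combine outputs of only the selected experts; the others never run."""
    out = [0.0] * len(token)
    for i, weight in top_k_route(token, gate_weights, k):
        expert_out = experts[i](token)
        out = [o + weight * e for o, e in zip(out, expert_out)]
    return out
```

For example, with four experts and gate vectors `[[1, 0], [0, 1], [1, 1], [-1, -1]]`, the input `[1.0, 0.5]` scores highest on experts 2 and 0, so only those two are executed and the other two cost nothing for this input.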

Combining these two architectural innovations allows Gemini 1.5 to use its computational resources more effectively and perform more complex tasks without a proportional increase in resource consumption.
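The "without a proportional increase" claim comes down to simple arithmetic: adding experts grows the model's total parameter count, but each token still passes through only k experts, so the parameters used per token stay flat. The sizes below are made-up units chosen for illustration, not Gemini's actual configuration, which Google has not published.

```python
def moe_param_counts(n_experts, params_per_expert, shared_params, k):
    """Return (total_params, active_params_per_token) for a toy MoE model.

    shared_params: parameters every token uses (e.g., attention layers).
    Only k of the n_experts expert blocks run for each token.
    """
    total = shared_params + n_experts * params_per_expert
    active = shared_params + k * params_per_expert
    return total, active


# Hypothetical sizes in arbitrary units; not Gemini's real numbers.
for n in (8, 16, 32):
    total, active = moe_param_counts(n, params_per_expert=1_000, shared_params=2_000, k=2)
    print(f"{n:>2} experts: total={total:,} active per token={active:,}")
```

Going from 8 to 32 experts more than triples total capacity (10,000 to 34,000 units), while the parameters each token actually uses stay at 4,000.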

Summary: Gemini 1.5

The release of Gemini 1.5 is unquestionably a landmark achievement for the AI industry.

By surpassing the previous longest context window of 200k tokens, held by Anthropic's Claude 2.1, Gemini 1.5 has the potential to unlock many new capabilities for AI in business.

Unfortunately, like every Google "release," we don't actually have access to the model yet, so at this point we can only speculate about how it compares to GPT-4.

You can learn more about Gemini 1.5 in Google's blog post and technical report.