In this article, we'll discuss one of the hottest topics in AI at the moment: the art and practice of prompt engineering.

Prompt engineering is an essential element in the development, training, and usage of large language models (LLMs) and involves the skillful design of input prompts to improve the performance and accuracy of the model.

We'll look at why prompt engineering has become so widely discussed lately, and why it will likely become increasingly important (at least for a few years) as LLM-enabled apps continue to proliferate.

We'll cover key concepts and use cases of prompt engineering, including:

- What is Prompt Engineering?

- Prompt Engineering: Key Terms

- Prompt Engineering: Examples

- Prompt Engineering: Roles

- Prompt Engineering: Parameters

- Prompt Engineering: Use Cases

What is Prompt Engineering?

Prompt engineering is the practice (and art) of designing and refining the input provided to models such as ChatGPT, GPT-3, DALL-E, Stable Diffusion, Midjourney, or any other generative AI model.

The goal of prompt engineering is to improve the performance of the language model by providing clear, concise, and well-structured input that is tailored to the specific task or application for which the model is being used.

In practice, this often entails a meticulous selection of the words and phrases included in the prompt, as well as the overall structure and organization of the input.

Because prompt engineering can have such a significant impact on the performance of the underlying language model, it is, despite all the memes, a crucial aspect of working with large language models (at least for now).

2021: Prompt Engineer isn't a real job!!

2023: Prompt Engineers wanted, Salary: $250K - $335k 👇 https://t.co/qe54fTJw2v

— DataChazGPT 🤯 (not a bot) (@DataChaz) February 14, 2023

As such, prompt engineering requires a deep understanding of both the capabilities and limitations of LLMs, as well as an artistic sense of how to craft a compelling input. It also requires a keen eye for detail, as small changes to the prompt can result in significant changes to the output.

For example, one key aspect of prompt engineering is simply providing sufficient context for the LLM to generate a coherent answer. This can involve working with external documents or proprietary data that the base model doesn't have access to, or framing the input in a way that helps the model understand the context and produce a higher-quality response.

In short, effective prompt engineering requires a deep understanding of the capabilities and limitations of LLMs, as well as an artistic sense of how to craft input prompts that produce high-quality, coherent outputs.

Prompt Engineering: Key Terms

Large Language Models (LLMs) are a type of artificial intelligence that has been trained on a massive corpus of text data to produce human-like responses to natural language inputs.

LLMs are unique in their ability to generate high-quality, coherent text that is often indistinguishable from that of a human. This state-of-the-art performance is achieved by training the LLM on a vast corpus of text, typically at least several billion words, which allows it to learn the nuances of human language.

Below are several key terms related to prompt engineering and LLMs, starting with the main algorithms used in LLMs:

- Word embedding is a foundational technique in LLMs: it represents the meaning of words in a numerical format that can then be processed by the AI model.

- Attention mechanisms enable the model to focus on specific parts of the input text, for example the sentiment-related words, when generating an output.

- Transformers are a type of neural network architecture, popular in LLM research, that uses self-attention mechanisms to process input data.

- Fine-tuning is the process of adapting an LLM for a specific task or domain by training it on a smaller, relevant dataset.

- Prompt engineering is the skillful design of input prompts for LLMs to produce high-quality, coherent outputs.

- Interpretability is the ability to understand and explain the outputs and decisions of an AI system, which is often a challenge and ongoing area of research for LLMs due to their complexity.
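To make the first two terms a little more concrete, here is a minimal sketch of word embeddings and attention weights in plain Python. The three-dimensional vectors and the "sentiment" query are made up for illustration; in a real transformer the embeddings are learned, have far more dimensions, and the queries and keys come from learned projections rather than being written by hand.

```python
import math

# Toy 3-dimensional "embeddings". The values are invented for this example;
# real models learn vectors with hundreds or thousands of dimensions.
EMBEDDINGS = {
    "movie": [0.9, 0.1, 0.0],
    "was": [0.1, 0.1, 0.1],
    "terrible": [0.0, 0.2, 0.9],
}


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


tokens = list(EMBEDDINGS)
keys = [EMBEDDINGS[token] for token in tokens]

# Hypothetical query vector that "looks for" sentiment-like features
# (the third dimension in this toy setup).
sentiment_query = [0.0, 0.0, 4.0]
weights = attention_weights(sentiment_query, keys)

for token, weight in zip(tokens, weights):
    print(f"{token}: {weight:.2f}")
```

In this toy setup, the word whose embedding is strongest in the third dimension receives the largest share of the attention, which is the sense in which attention lets a model "focus" on particular words.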

Key elements of prompts

As DAIR.AI highlights in its overview of prompt engineering, the key elements of a prompt include:

- Instructions: Instructions are the main goal of the prompt and provide a clear objective for the language model.

- Context: Context provides additional information to help the LM generate more relevant output. It can come from external sources or be provided by the user.

- Input data: Input is the user's question or request that we want a response for.

- Output indicator: The type or format of output we want for the response.

When writing prompts, one principle that applies to every element is specificity. As DAIR.AI writes:

The more descriptive and detailed the prompt is, the better the results.
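As a rough illustration of how the four elements fit together, the sketch below joins them into a single prompt string. The function name `build_prompt`, the section labels, and the store-hours example are all assumptions made for this example; they are not part of DAIR.AI's guide or of any library.

```python
def build_prompt(instruction, context, input_data, output_indicator):
    """Join the four prompt elements into one string, skipping empty ones."""
    sections = [
        ("Instruction", instruction),
        ("Context", context),
        ("Input", input_data),
        ("Output format", output_indicator),
    ]
    return "\n\n".join(
        f"{label}: {text.strip()}"
        for label, text in sections
        if text and text.strip()
    )


prompt = build_prompt(
    instruction="Answer the question using only the context provided.",
    context="Our store is open Monday to Friday, 9am to 5pm.",
    input_data="Is the store open on Saturday?",
    output_indicator="Reply with 'Yes' or 'No', then one short sentence.",
)
print(prompt)
```

Keeping the elements separate until the last moment makes it easier to vary one of them (say, a more specific instruction) while holding the others fixed.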

Prompt Engineering: Examples

Let's look at a few examples of effective prompt engineering from the Awesome ChatGPT Prompts GitHub repo.

Python interpreter

I want you to act like a Python interpreter. I will give you Python code, and you will execute it. Do not provide any explanations. Do not respond with anything except the output of the code. The first code is: "print('hello world!')"

Prompt Generator

I want you to act as a prompt generator. Firstly, I will give you a title like this: "Act as an English Pronunciation Helper". Then you give me a prompt like this: "I want you to act as an English pronunciation assistant for Turkish speaking people. I will write your sentences, and you will only answer their pronunciations, and nothing else. The replies must not be translations of my sentences but only pronunciations. Pronunciations should use Turkish Latin letters for phonetics. Do not write explanations on replies. My first sentence is "how the weather is in Istanbul?"." (You should adapt the sample prompt according to the title I gave. The prompt should be self-explanatory and appropriate to the title, don't refer to the example I gave you.). My first title is "Act as a Code Review Helper" (Give me prompt only)

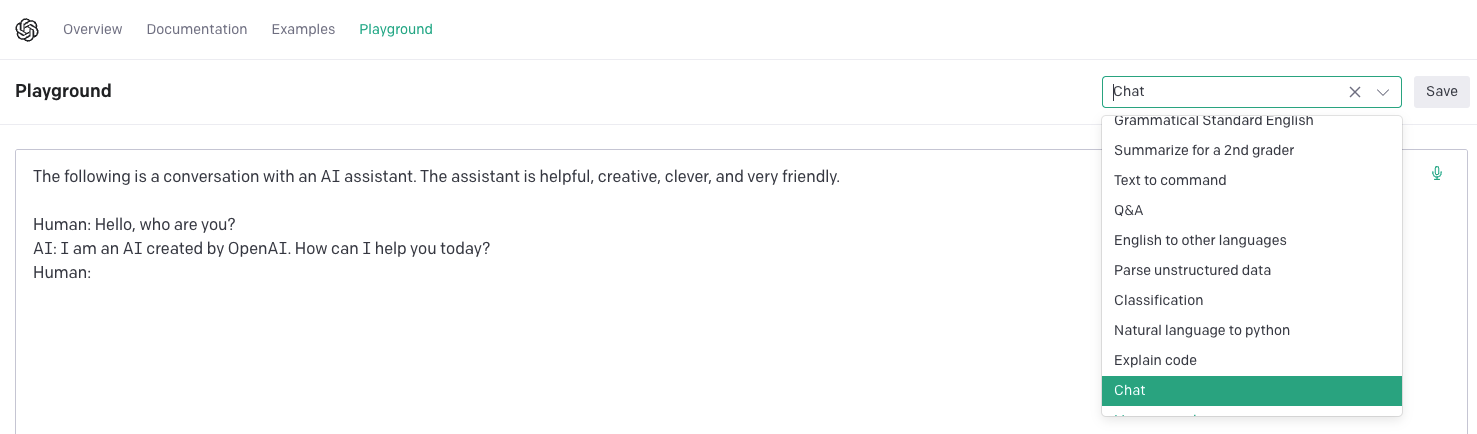

We can also find a number of prompt templates in the OpenAI Playground.
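The two example prompts above follow a similar pattern: a role, a handful of rules, and a first input. A minimal sketch of that pattern as a reusable template might look like the following; `act_as_prompt` is a hypothetical helper written for this article, not something provided by the repo or by OpenAI.

```python
def act_as_prompt(role, rules, first_input):
    """Build an "act as" prompt from a role, a list of rules, and a first input."""
    # Make sure every rule ends with exactly one period.
    rule_text = " ".join(rule.rstrip(".") + "." for rule in rules)
    return f'I want you to act as {role}. {rule_text} My first input is: "{first_input}"'


prompt = act_as_prompt(
    role="a Python interpreter",
    rules=[
        "I will give you Python code, and you will execute it",
        "Do not provide any explanations",
        "Do not respond with anything except the output of the code",
    ],
    first_input="print('hello world!')",
)
print(prompt)
```

A template like this makes it easy to keep the wording of the rules consistent while swapping in different roles.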

Prompt Engineering: Roles

As you can see in these examples, each prompt involves a "role", which, as we saw with the ChatGPT API release, is an essential part of guiding the chatbot. As discussed in our guide on the ChatGPT API, there are three roles to be aware of:

- System: The "system" message sets the overall behavior of the assistant. For example: "You are ChatGPT, a large language model trained by OpenAI. Answer as concisely as possible. Knowledge cutoff: {knowledge_cutoff} Current date: {current_date}"

- User: The user messages provide specific instructions for the assistant. They will mainly come from the end users of an application, but can also be hard-coded by developers for specific use cases.

- Assistant: The assistant messages store previous ChatGPT responses. They can also be written by developers to give examples of the desired behavior.
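To show how the three roles combine in practice, here is a small sketch that assembles a message list from a system prompt, developer-written examples, earlier conversation turns, and a new user input. The helper `build_messages` and the translation example are assumptions for illustration; only the `role` and `content` keys and the three role names come from the ChatGPT API.

```python
def build_messages(system_prompt, examples, history, user_input):
    """Assemble a chat message list: system, few-shot examples, history, new input."""
    messages = [{"role": "system", "content": system_prompt}]
    # Few-shot examples are written by the developer as user/assistant pairs.
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    # Earlier turns of the real conversation, already in role/content form.
    messages.extend(history)
    # The newest user message always goes last.
    messages.append({"role": "user", "content": user_input})
    return messages


messages = build_messages(
    system_prompt="You translate English to French. Reply with the translation only.",
    examples=[("Good morning", "Bonjour")],
    history=[],
    user_input="Thank you very much",
)
for message in messages:
    print(message["role"], "->", message["content"])
```

Because the model has no memory between requests, the full list (including earlier assistant replies) is sent on every call.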

Here's an example of what a ChatGPT API request looks like:

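    import openai

    # Legacy interface from versions of the openai Python package before 1.0
    response = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=[
            # Sets the assistant's overall behavior
            {"role": "system", "content": "You are a helpful assistant."},
            # Earlier turns of the conversation, replayed for context
            {"role": "user", "content": "Who won the world series in 2020?"},
            {"role": "assistant", "content": "The Los Angeles Dodgers won the World Series in 2020."},
            # The new question the model should answer
            {"role": "user", "content": "Where was it played?"},
        ],
    )

Prompt Engineering: Parameters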

Aside from carefully constructing the written part of a prompt, there are several prompt engineering parameters to consider when working with LLMs. For example, let's look at the API parameters available for GPT-3 Completions in the OpenAI Playground:

- Model: The model to be used for the text completion, e.g. text-davinci-003.

- Temperature: Controls randomness; lower values yield more deterministic and repetitive responses.

- Maximum length: The maximum number of tokens to generate. The limit varies by model; ChatGPT allows roughly 4,000 tokens (approx. 3,000 words), shared between the prompt and the completion (1 token ≈ 4 characters).

- Stop sequences: Up to 4 sequences at which the API will stop generating further tokens.

- Top P: Controls nucleus sampling, in which the model samples only from the most likely tokens whose combined probability reaches P, e.g. 0.5 means only the tokens that make up the top half of the probability mass are considered.

- Frequency penalty: Discourages the model from repeating the same words or phrases too often by penalizing tokens in proportion to how often they have already appeared. It is particularly useful for generating long-form text when you want to avoid repetition.

- Presence penalty: Increases the likelihood that the model will talk about new topics by penalizing tokens based on whether they have already appeared in the text so far.

- Best of: Generates multiple completions on the server and returns only the best one. Streaming completions only work when this is set to 1.
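The sampling parameters in the list above can be sketched in a few lines of plain Python. This is the common textbook formulation of temperature scaling and top-p (nucleus) filtering, not OpenAI's actual implementation, which is not public. The penalty adjustment follows the formula given in OpenAI's API documentation. The logits and token names are invented for the example.

```python
import math
import random


def apply_penalties(logits, counts, frequency_penalty=0.0, presence_penalty=0.0):
    """Lower the logit of tokens that already appear in the text so far.

    Subtracts count * frequency_penalty, plus presence_penalty once
    if the token has appeared at all.
    """
    return {
        token: logit
        - counts.get(token, 0) * frequency_penalty
        - (presence_penalty if counts.get(token, 0) > 0 else 0.0)
        for token, logit in logits.items()
    }


def token_probabilities(logits, temperature=1.0):
    """Softmax over logits divided by the temperature (must be > 0)."""
    scaled = {token: logit / temperature for token, logit in logits.items()}
    peak = max(scaled.values())
    exps = {token: math.exp(value - peak) for token, value in scaled.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}


def top_p_filter(probs, top_p=1.0):
    """Keep the most likely tokens until their cumulative probability reaches top_p."""
    kept, cumulative = {}, 0.0
    for token, p in sorted(probs.items(), key=lambda item: item[1], reverse=True):
        kept[token] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())  # renormalize the surviving tokens
    return {token: p / total for token, p in kept.items()}


def sample(logits, temperature=1.0, top_p=1.0, rng=random):
    """Draw one token after temperature scaling and top-p filtering."""
    probs = top_p_filter(token_probabilities(logits, temperature), top_p)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Made-up logits for the next token after "The weather is".
logits = {"sunny": 2.0, "rainy": 1.0, "purple": -1.0}
sharp = token_probabilities(logits, temperature=0.2)
flat = token_probabilities(logits, temperature=2.0)
print(f"P(sunny) at T=0.2: {sharp['sunny']:.3f}, at T=2.0: {flat['sunny']:.3f}")
print("Kept at top_p=0.9:", sorted(top_p_filter(token_probabilities(logits), 0.9)))
```

Lower temperatures concentrate probability on the most likely token, while a smaller top-p removes unlikely tokens from consideration entirely. The two controls overlap in effect, which is one reason to test them one at a time.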

In summary, each use case of prompt engineering will have its own optimal parameters to achieve the desired results, so it's worth understanding and testing variations of parameter settings to improve performance.
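One simple way to test variations is to generate a small grid of settings and try each one against the same prompt. The sketch below only builds the settings; it does not call any API. The helper names are hypothetical, the 4,000-token window and the 4-characters-per-token rule are the rough figures quoted above, and a real tokenizer would give exact counts.

```python
from itertools import product

CONTEXT_WINDOW = 4000  # tokens shared by prompt and completion (rough figure)
CHARS_PER_TOKEN = 4    # rough heuristic; a real tokenizer gives exact counts


def estimate_tokens(text):
    """Rough token count from character length, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def completion_budget(prompt, context_window=CONTEXT_WINDOW):
    """Tokens left for the completion once the prompt is accounted for."""
    return max(0, context_window - estimate_tokens(prompt))


def parameter_grid(prompt, temperatures, top_ps):
    """One settings dict per (temperature, top_p) combination."""
    budget = completion_budget(prompt)
    return [
        {"temperature": t, "top_p": p, "max_tokens": budget}
        for t, p in product(temperatures, top_ps)
    ]


prompt = "Summarize the following article in three bullet points: ..."
grid = parameter_grid(prompt, temperatures=[0.0, 0.7], top_ps=[0.5, 1.0])
for settings in grid:
    print(settings)
```

OpenAI's documentation generally suggests adjusting either temperature or top-p rather than both, so in practice it may make sense to pass a single value for one of the two lists.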

Prompt Engineering: Use Cases

Now that we've covered the basics, a few of the most common prompt engineering tasks include:

- Text summarization: useful for extracting the key points of an article or document

- Question answering: useful for working with external documents or databases

- Text classification: useful for tasks such as sentiment analysis, entity extraction, and so on

- Role-playing: involves generating text that simulates a conversation for specific use cases and character types (tutors, therapists, analysts, etc.)

- Code generation: the most notable example of which is GitHub Copilot

- Reasoning: useful for generating text that demonstrates logical or problem-solving skills, such as decision making

- AI art: prompt engineering is also an important part of working with text-to-image generators, and each model has its own set of terms and settings to be aware of

With all the fear mongering about how generative models are gonna steal all the artist jobs, no one's talking about how prompt engineering has created a tangible incentive for people to study art history and learn about different artists and art styles.

— David Marx (@DigThatData) July 27, 2022
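As one worked example of the use cases listed above, here is a minimal sketch of a few-shot prompt for text classification (sentiment analysis), together with a small parser for the model's reply. The label set, the example reviews, and both helper functions are assumptions made for illustration; no model is called.

```python
LABELS = ("positive", "negative", "neutral")


def classification_prompt(examples, text):
    """Build a few-shot sentiment prompt that ends where the model should answer."""
    lines = [f"Classify the sentiment of each review as one of: {', '.join(LABELS)}.", ""]
    for example_text, label in examples:
        lines += [f"Review: {example_text}", f"Sentiment: {label}", ""]
    # Leave the final label blank so the model completes it.
    lines += [f"Review: {text}", "Sentiment:"]
    return "\n".join(lines)


def parse_label(response):
    """Map a free-text model reply to one of LABELS, or None if it is unclear."""
    cleaned = response.strip().lower().rstrip(".")
    return cleaned if cleaned in LABELS else None


prompt = classification_prompt(
    examples=[
        ("Loved it, would buy again.", "positive"),
        ("Broke after two days.", "negative"),
    ],
    text="It arrived on time.",
)
print(prompt)
```

Ending the prompt with an empty "Sentiment:" line acts as the output indicator, and validating the reply against a fixed label set guards against answers that drift from the requested format.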

Summary: Prompt Engineering

Prompt engineering is a fast-evolving area of research, and it will likely become an increasingly important skill to master for AI engineers, researchers, and anyone else interested in working with LLMs.

By providing clear instructions, relevant context, and output indicators, we can often drastically improve the performance of LLMs and get more accurate and relevant responses. Lastly, it's important to consider which parameter settings, such as temperature and top-p, suit each use case, since they control how deterministic the output is.

In the next few articles, we'll dive into these prompt engineering concepts in a bit more detail. Until then, you can check out a few other relevant resources below.