One of the most relevant applications of machine learning for finance is natural language processing.

While some applications in the field are certainly overhyped (e.g. trading on social media sentiment), NLP still has many useful applications in finance. For example, being able to search 10-Ks and 10-Qs for insights can save analysts thousands of hours of research time.

To that end, in this article we'll introduce the core concepts of natural language processing and look at several open source Python libraries we can use to efficiently analyze large corpora of text data.

This article is based on notes from the Udacity AI for Trading program, and is organized as follows:

- Introduction to Natural Language Processing

- Text Processing

- Feature Extraction

- Scraping Data from Financial Statements

- Introduction to Regexes

- Introduction to BeautifulSoup

- Basic NLP Analysis

Let's get started.

1. Introduction to Natural Language Processing

In this section we'll look at the difference between structured and unstructured language, as well as a high-level overview of the NLP pipeline.

Structured Languages

First, let's discuss what makes it difficult for computers to understand natural language. One of the main drawbacks of human language is the lack of a precisely defined structure.

To give a few examples of structured languages: mathematics is a precise language, and formal logic also uses a structured language. Scripting and programming languages are further examples.

Grammar

Structured languages like those mentioned above are easy for computers to parse since they have a precisely defined set of rules, or grammar.

There are standard forms of expressing such algorithms and grammar, so a computer understands what is intended.

If a statement doesn't match the strictly prescribed grammar a computer will typically just give up and return a syntax error.

Unstructured Text

Human language that we use for communication also has defined grammatical rules, and in some cases we use simple structured sentences.

The majority of human discourse, however, is complex and unstructured.

Despite that, we have no problem understanding each other even though ambiguity is such a big part of language.

There are a few things computers can do to make sense of this unstructured text:

- They can process words and phrases at some level to identify keywords, parts of speech, named entities, dates, quantities, and so on

- They can also parse sentences to help extract meaningful information such as statements, questions, or instructions

- They can analyze documents at a high level to find frequent and rare words, assess the tone and sentiment, and cluster similar documents together

Building on each of these, computers can do a lot of useful things with unstructured text.

Context & Semantics

One of the harder challenges in NLP is understanding the variability and complexity of sentences. As humans, we make sense of this variability by understanding the overall context or background of the sentence.

Another challenge of NLP is understanding the semantics of a sentence.

As humans, we implicitly apply knowledge and experience about the physical world to our understanding of language, but since there are countless scenarios that could be encountered in natural language, this is a major challenge for computers.

NLP Pipelines

In order to develop an NLP pipeline, at a high level we want to be able to:

- Start with raw text and process it

- Extract relevant features

- Build models to accomplish various NLP tasks

Each of these stages involves transforming text in some way and producing a result for the next stage. The workflow of building NLP pipelines is often not linear, and you may jump between building models and text processing.

Text Processing

To understand why we need to process text and can't simply feed it in directly, consider pulling text from an HTML web page.

The HTML will serve as our raw input, so for the purpose of NLP we want to get rid of most, if not all, of the HTML tags and retain only plain text.

Other than processing web-based text, you may also need to process PDFs or other file formats. Regardless of the medium, the goal is to remove any source-specific markers or constructs not relevant to the NLP task at hand.

After we have the input in plain text format, we will also sometimes remove capitalization and punctuation so that variants of the same word, such as "Stock" and "stock", aren't treated differently.
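
As a rough illustration of the cleaning step described above, the sketch below strips HTML tags using only Python's standard library html.parser module. The TextExtractor class, the html_to_text helper, and the sample markup are hypothetical names and data introduced here for illustration; later sections of this article use BeautifulSoup for the same job.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect text nodes from HTML, ignoring script and style content."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep text only when we are not inside a script or style element
        if self._skip_depth == 0:
            self.parts.append(data)


def html_to_text(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    # Collapse the runs of whitespace left behind by the removed tags
    return " ".join(" ".join(parser.parts).split())


raw = "<html><body><h1>Annual Report</h1><p>Revenue <b>rose</b> 5%.</p></body></html>"
plain = html_to_text(raw)
normalized = plain.lower()

print(plain)
print(normalized)
```

Keeping the tag stripping separate from the lowercasing makes it easy to skip normalization for tasks where capitalization carries meaning.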

Feature Extraction

After we have clean, normalized text we still need to do feature extraction.

Text data is represented on modern computers using an encoding such as ASCII or Unicode, which maps each character to a number. Computers then store and transmit these numbers as binary.

Computers don't have a standard representation for words. Internally, words are just sequences of ASCII or Unicode characters.
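
To make the character-to-number mapping concrete, here is a small sketch using Python built-ins. The example word is arbitrary.

```python
word = "cat"

# Each character maps to a number (its Unicode code point)
code_points = [ord(ch) for ch in word]

# Encoding turns the string into bytes, which are stored as binary
utf8_bytes = word.encode("utf-8")
bits = [format(b, "08b") for b in utf8_bytes]

# Characters outside ASCII take more than one byte in UTF-8
euro_bytes = "€".encode("utf-8")

print(code_points)      # [99, 97, 116]
print(bits)             # ['01100011', '01100001', '01110100']
print(len(euro_bytes))  # 3
```

Nothing in these numbers says that "cat" is a word or what it means, which is why a separate feature extraction step is needed.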

Coming up with a representation of text data that we can use for modeling depends partly on what type of model we want to use and the NLP task we're trying to accomplish.

For example, if we want to use a graph-based model to extract meaning, we could represent words as symbolic nodes with relationships between them. This is the approach taken by WordNet, which is:

...a large lexical database of English. Nouns, verbs, adjectives and adverbs are grouped into sets of cognitive synonyms (synsets), each expressing a distinct concept. Synsets are interlinked by means of conceptual-semantic and lexical relations.

For statistical models, on the other hand, we need some form of numerical representation. The right representation also depends on the level of the task: for a document-level task, we may use a per-document representation like bag-of-words or doc2vec.

If we're working with individual words and sentences, for example for machine translation, we need a word level representation like word2vec or GloVe.

Modeling

The last step involves designing a model, typically a statistical or machine learning model, fitting its parameters to training data using an optimization procedure, and then using the model to make predictions on unseen data.

The benefit of using numerical features is that we can use almost any machine learning model including support vector machines, neural networks, and so on.

2. Text Processing

In this section we'll review how to read text data from various sources and prepare it for feature extraction.

In particular, we'll perform the following tasks:

- Clean it by removing unnecessary items such as HTML tags

- Normalize text by converting it to lowercase and removing punctuation and extra spaces

- Split the text into words or tokens and remove overly common words such as "the", otherwise known as stop words

- Learn how to identify different parts of speech, named entities, and how to convert words into canonical forms using stemming and lemmatization

After we've preprocessed the text, we can then move on to feature extraction.

Reading Text Data

There are many sources we can read text data from. The simplest is a plain text file on our local computer.

Text data can also be a part of larger tabular data such as a CSV file, which we can read in easily using Pandas. Other times, we'll use an API to connect to another website such as Twitter.

Normalization

After we read in the text data we need to reduce some of its complexity with normalization.

The first thing we will normalize is uppercase letters.

While uppercase letters are convenient for human readers, from the standpoint of a machine learning algorithm it doesn't make sense to differentiate between "Cat", "cat", and "CAT", since they all mean the same thing.

We can do this easily with the lower() method in Python.

Depending on the NLP task, we may then want to remove punctuation and special characters like "?" and "!". This is particularly useful if we're looking at documents as a whole, for example in a classification problem.

We can remove punctuation in Python as follows:

import re

# remove punctuation: replace every character that is not a letter or digit with a space

text = re.sub(r"[^a-zA-Z0-9]", " ", text)

Tokenization

GeeksforGeeks describes tokenization as the:

...process of tokenizing or splitting a string, text into a list of tokens. One can think of token as parts like a word is a token in a sentence, and a sentence is a token in a paragraph.

In other words, it is the process of splitting each sentence into a sequence of words.

The simplest way to do this is with the split() method:

# split text into tokens

words = text.split()

We can also perform these operations with NLTK, or the Natural Language Toolkit. The most common way to split text with NLTK is with the word_tokenize function:

from nltk.tokenize import word_tokenize

# split text into words

words = word_tokenize(text)

If we want to split text into sentences, we can use NLTK's sent_tokenize function:

from nltk.tokenize import sent_tokenize

# split text into sentences

sentences = sent_tokenize(text)

There are other tokenizers, such as a regular expression tokenizer, which removes punctuation and performs tokenization in a single step.
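
NLTK provides a RegexpTokenizer class for this. To show the idea without extra dependencies, the sketch below uses only the standard library re module: a single pattern keeps runs of word characters and drops everything else. The sample sentence is made up for illustration.

```python
import re

text = "Net income rose 8%, beating estimates!"

# \w+ matches runs of letters, digits, and underscores, so punctuation
# is discarded and the text is split into tokens in one pass
tokens = re.findall(r"\w+", text.lower())

print(tokens)  # ['net', 'income', 'rose', '8', 'beating', 'estimates']
```

Note that this also drops the percent sign, so whether it is appropriate depends on whether symbols like "%" matter for the task.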

Cleaning

As mentioned, the text we read in from online sources almost always needs to be cleaned.

One way we can parse HTML is with Beautiful Soup, which is a Python library for pulling data out of HTML and XML files that will be discussed in more detail later.

We can extract text from an HTML page with the get_text() method:

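from bs4 import BeautifulSoup

# parse the HTML and strip the tags; r is assumed to be a requests Response object (see the Requests Library section)

soup = BeautifulSoup(r.text, "html5lib")

print(soup.get_text())

Stop Word Removal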

Stop words are uninformative words such as "the", "in", "at", and so on.

We often want to remove these to reduce the complexity that we have to deal with.

To remove stop words, we can use a Python list comprehension as follows:

from nltk.corpus import stopwords

# build the stop word set once so membership checks are fast

stop_words = set(stopwords.words("english"))

# remove stop words

words = [w for w in words if w not in stop_words]

Part-of-Speech Tagging

We can also tag parts of speech as nouns, pronouns, verbs, adverbs, etc. in order to better understand what is being said.

We can also point out relationships between words and recognize cross references.

To do this with NLTK, we can pass tokens into the pos_tag function, which returns a tag for each word identifying its part of speech.

from nltk import pos_tag

from nltk.tokenize import word_tokenize

# tag parts of speech

sentence = word_tokenize("I always lie down to tell a lie")

pos_tag(sentence)

Named Entity Recognition

Named entities are typically noun phrases that reference a specific noun, object, person, or place.

To recognize named entities, we first need to tokenize and tag parts of speech, then we can use the ne_chunk function:

from nltk import pos_tag, ne_chunk

from nltk.tokenize import word_tokenize

# recognize named entities

ne_chunk(pos_tag(word_tokenize("Antonio joined Udacity Inc. in California")))

Named entity recognition is often used to index and search for news articles, for example if you're searching for certain companies.

Stemming & Lemmatization

Now let's review two ways to normalize different variations and modifications of words.

Stemming is the process of reducing a word to its stem or root form. For example, "jumping", "jumped", and "jumps" can all be reduced to "jump". This again reduces complexity while retaining the essence of the sentence.

NLTK has a few stemmers to choose from including PorterStemmer, SnowballStemmer, and others.

Lemmatization is another technique to reduce words to a normalized format. In this case, the transformation actually uses a dictionary to map different variants of a word back to its root.

The default NLTK lemmatizer WordNetLemmatizer uses the WordNet database to reduce words to their root form.
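
The difference between the two approaches can be sketched with a pair of toy functions. These are deliberately crude stand-ins, not the Porter algorithm or the WordNet lemmatizer: crude_stem applies a few suffix rules, while crude_lemmatize looks words up in a tiny hypothetical dictionary (LEMMA_DICT) invented for this example.

```python
def crude_stem(word):
    """Strip a few common suffixes. A toy rule set, not the Porter algorithm."""
    for suffix in ("ing", "ed", "s"):
        # Only strip when at least three characters would remain
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


# Hypothetical mini-dictionary standing in for a real lexical database
LEMMA_DICT = {"was": "be", "is": "be", "were": "be", "mice": "mouse"}


def crude_lemmatize(word):
    """Look the word up in the dictionary; fall back to the word itself."""
    return LEMMA_DICT.get(word, word)


stems = [crude_stem(w) for w in ["jumping", "jumped", "jumps"]]
lemmas = [crude_lemmatize(w) for w in ["was", "mice", "jumps"]]

print(stems)   # ['jump', 'jump', 'jump']
print(lemmas)  # ['be', 'mouse', 'jumps']
```

Rule-based stemming needs no dictionary but cannot relate "was" to "be", whereas dictionary lookup handles irregular forms but only for words it knows about.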

3. Feature Extraction

Now that we've cleaned and normalized our text data, we need to transform it into features that can be used for modeling.

To do so, we'll review the following feature extraction techniques:

- Bag of Words

- TF-IDF

- One-Hot Encoding

- Word Embeddings

- Word2vec

- GloVe

- t-SNE

Bag of Words

Bag-of-words treats each document as an unordered collection, or "bag", of words.

To transform a document to a bag of words we apply the text processing steps mentioned above: cleaning, normalizing, etc., and then treat the tokens as an unordered collection or set.

Another useful approach is to turn each document into a vector of numbers, representing how many times each word occurs.

First, we collect all the unique words in the corpus to form the vocabulary. We then let these words form the vector element positions, or columns of a table. We then assume each document is a row and count the number of occurrences of each word in it.

We can think of this as a document-term matrix in which each element represents a term frequency.
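
The steps above can be sketched in plain Python as follows. The three short documents are invented for illustration, and tokenization is reduced to a whitespace split to keep the example small.

```python
from collections import Counter

docs = [
    "profit rose as costs fell",
    "costs rose and profit fell",
    "litigation costs rose",
]

tokenized = [doc.split() for doc in docs]

# The vocabulary is the sorted set of unique words across the corpus
vocabulary = sorted({word for doc in tokenized for word in doc})

# One row per document, one column per vocabulary word
matrix = []
for doc in tokenized:
    counts = Counter(doc)
    matrix.append([counts[word] for word in vocabulary])

print(vocabulary)
for row in matrix:
    print(row)
```

The first two documents end up with nearly identical rows even though their word order differs, which is exactly the information a bag of words throws away.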

TF-IDF

A limitation of bag-of-words is that it treats every word as equally important. In reality, we know that some words occur frequently across many documents in a corpus and so say little about any single one of them.

We can compensate for this by counting the number of documents in which each word occurs, known as the document frequency.

Dividing the term frequency by the document frequency then gives a metric that is proportional to how often a term occurs in a document and inversely proportional to the number of documents it appears in.

Thus, it highlights words that are more unique to a document, which may make it better at characterizing it.

The TF-IDF transform is simply the product of two weights:

tfidf(t,d,D)=tf(t,d)⋅idf(t,D)

where:

- tf(t,d) is the term frequency

- idf(t,D) is the inverse document frequency
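
A minimal sketch of this product is shown below. Several variants of both weights are in use; this example assumes tf is the term count divided by the document length and idf is log(N / df), where N is the number of documents and df is the number of documents containing the term. The tf_idf function name and the sample documents are illustrative only.

```python
import math
from collections import Counter


def tf_idf(tokenized_docs):
    """Return one {term: weight} dict per document."""
    n_docs = len(tokenized_docs)

    # Document frequency: in how many documents does each term appear?
    df = Counter()
    for doc in tokenized_docs:
        df.update(set(doc))

    weights = []
    for doc in tokenized_docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


docs = [
    ["costs", "rose"],
    ["costs", "fell"],
    ["litigation", "costs", "rose"],
]
weights = tf_idf(docs)

for w in weights:
    print(w)
```

Because "costs" appears in every document, its idf is log(1) = 0 and it drops out entirely, while "litigation", which appears in only one document, receives the largest weight there.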

One-Hot Encoding

Both the representations we've looked at try to characterize a document as one unit. Typically, this means the type of inference we can make is at the document level.

To extract features at a deeper level we need to come up with a numerical representation of each word.

One-hot encoding is one way to do this: we treat each word as a class and assign it a vector that has a one in a single pre-defined position for that word, and zeros everywhere else.

This is similar to bag-of-words, except we have a single word in each bag and build a vector for each one.
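
A short sketch of one-hot encoding over a small, made-up vocabulary:

```python
vocabulary = ["costs", "fell", "profit", "rose"]

# Each word gets a fixed position in the vector
index = {word: i for i, word in enumerate(vocabulary)}


def one_hot(word):
    """Return a vector with a 1 at the word's position and 0 elsewhere."""
    vector = [0] * len(vocabulary)
    vector[index[word]] = 1
    return vector


print(one_hot("profit"))  # [0, 0, 1, 0]
print(one_hot("rose"))    # [0, 0, 0, 1]
```

The vector length equals the vocabulary size, which is what makes this representation impractical for large vocabularies.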

Word Embeddings

If we have a large number of words to deal with in the document, one-hot encoding breaks down since the size of our word representation grows with the number of words.

Word embedding is a way to control the size of our word representation by limiting it to a single fixed-size vector.

To do this, we find an embedding for each word in some vector space. For example, if two words are similar in meaning—such as "kid" and "child"—they should be closer together.

Word2Vec

One of the most popular examples of word embedding used in practice is called word2vec, which is a group of related models used to produce word embeddings.

As the name suggests, it transforms words into vectors. It works as follows:

These models are shallow, two-layer neural networks that are trained to reconstruct linguistic contexts of words. Word2vec takes as its input a large corpus of text and produces a vector space, typically of several hundred dimensions, with each unique word in the corpus being assigned a corresponding vector in the space.

Word2Vec has the following properties:

- It yields a robust, distributed representation

- The vector size is independent of vocabulary

- It is also ready to be used in deep learning architectures

GloVe

Another type of word embedding is called GloVe, or Global Vectors for Word Representation, which is:

...an unsupervised learning algorithm for obtaining vector representations for words. Training is performed on aggregated global word-word co-occurrence statistics from a corpus, and the resulting representations showcase interesting linear substructures of the word vector space.

In other words, GloVe tries to directly optimize the vector representation of each word using co-occurrence statistics.

t-SNE

In order to capture sufficient variations in natural language, word embeddings need to have high dimensionality. This high dimensionality makes them difficult to visualize.

t-SNE, or t-distributed Stochastic Neighbor Embedding, is a dimensionality reduction technique that maps high-dimensional vectors to a lower-dimensional space.

It's similar to principal component analysis (PCA), except when performing the transformation it tries to maintain the relative distance between objects.

This property makes it particularly useful for visualizing word embeddings.

4. Scraping Data from Financial Statements

Now that we have an overview of NLP concepts, let's look at a real-world use case: pulling data from financial documents.

In particular, we'll look at where we can find 10-Ks, which may be useful for trading.

Financial Statements

As you probably know, publicly traded companies are required to file periodic reports to the relevant governing bodies.

The most common reports are called 10-Ks, which are filed annually, and 10-Qs, which are filed quarterly.

10-Ks are separated into four main sections:

- Business Overview: this includes information about risks and possible legal proceedings

- Markets & Finance: this includes a discussion about market conditions, projections, and highlights of the report

- Governance: this includes information on board members and compensation

- Full Financial Report: This contains the full details about the company's income, expenses, cash flow, and so on.

In order to apply natural language processing to 10-Ks, in future articles we'll make use of EDGAR, which stands for Electronic Data Gathering, Analysis, and Retrieval.

Retrieving 10-Ks from EDGAR

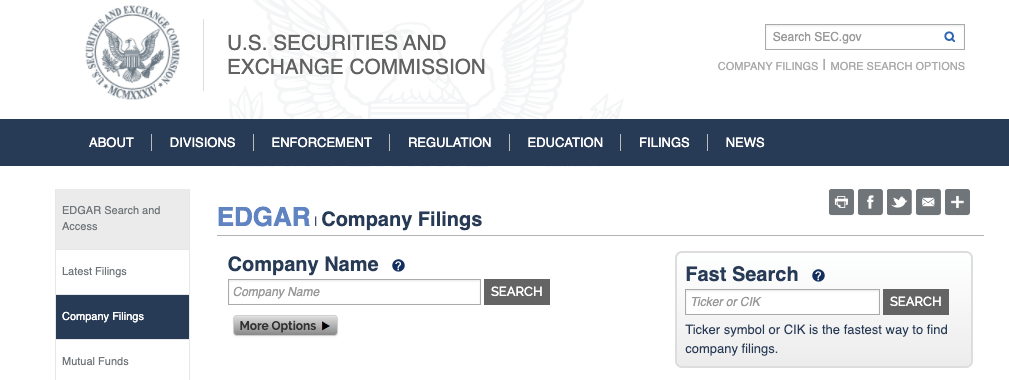

To access the EDGAR database, you can first go to SEC.gov and select "Edgar - Search & Access" in the "Filings" section.

Next we can click on "Company Filings" to find information on specific companies.

We can access a company by entering either its ticker or its CIK, which is the Central Index Key. A few examples of CIKs include:

cik_lookup = {

    'AMZN': '0001018724',

    'BMY': '0000014272',

    'CNP': '0001130310',

}

We won't cover pulling in financial data from the SEC in detail in this article, but you can learn more about it in project 5 of the Udacity course.
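
The CIKs above are written as ten-character, zero-padded strings. As a small sketch of how such a table might be used, the hypothetical get_cik helper below normalizes a ticker and returns its CIK in that padded form. The helper name and its behavior for unknown tickers are assumptions made for this example.

```python
cik_lookup = {
    "AMZN": "0001018724",
    "BMY": "0000014272",
    "CNP": "0001130310",
}


def get_cik(ticker, lookup=cik_lookup):
    """Return the zero-padded CIK for a ticker, or None if it is unknown."""
    cik = lookup.get(ticker.strip().upper())
    # zfill keeps the ten-character form even if a CIK was stored unpadded
    return cik.zfill(10) if cik is not None else None


print(get_cik("amzn"))  # 0001018724
print(get_cik("XYZ"))   # None
```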

5. Introduction to Regexes

We can use regular expressions—or Regexes—to extract useful information, such as scanning for negative terms such as "bankruptcy", or positive terms such as "increasing profits".

A regular expression allows us to search for patterns in text files.

This differs from a literal search, which only looks for exactly what is typed. A Regex search instead lets us look for particular patterns. For example, we can search for any phone number that comes up instead of searching for one specific phone number.
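
The phone number example can be sketched as follows. The sample text and the assumed number format (three digits, three digits, four digits, separated by hyphens) are made up for illustration; \d, which matches any digit, and the {n} repetition count are regex syntax explained in the Python re documentation.

```python
import re

sample_text = "Call 555-123-4567 or 555-987-6543 for investor relations."

# Literal search: finds only the exact string we typed
literal_hits = sample_text.count("555-123-4567")

# Pattern search: finds anything shaped like ddd-ddd-dddd
phone_regex = re.compile(r"\d{3}-\d{3}-\d{4}")
pattern_hits = phone_regex.findall(sample_text)

print(literal_hits)  # 1
print(pattern_hits)  # ['555-123-4567', '555-987-6543']
```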

Raw Strings

Before we create a Regex in Python, let's look at raw strings.

In Python, string literals are specified using single or double quotes, and the \ is used to escape characters that have special meaning, such as \n for newline or \t for tab.

In some cases, we want Python to interpret the string literally, which means we don't want characters prefaced by a backslash to be interpreted as special characters.

These strings are known as raw strings, and work as follows:

# raw strings

print(r'Hello\n\tWorld')

We will use raw strings with our regular expressions because regexes use the \ character to indicate their own special sequences. By using raw strings we avoid the issue of Python interpreting the special characters the wrong way.

Finding Words & Letters Using Regexes

Now let's look at how to find letters and words in a string using regular expressions.

To do this, we'll use the re module from Python's standard library.

To find every occurrence of a single letter in some sample text, for example all the a's, we can use:

import re

# compile a pattern that matches the letter 'a'; sample_text is assumed to hold the text to search

regex = re.compile(r'a')

matches = regex.finditer(sample_text)

for match in matches:

    print(match)

The finditer() method returns an iterator, which means we can loop through it to print all the matches.

Using Regexes to Search for Simple Patterns

We can use the following special sequences to search for simple patterns:

- \d matches any decimal digit

- \D matches any non-digit character

- \s matches any whitespace character

- \S matches any non-whitespace character

- \w matches any alphanumeric character and the underscore

- \W matches any non-alphanumeric character
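
A few of these sequences in action, using a made-up sentence. The + quantifier, which means "one or more of the preceding item", is not covered in this article and is used here only to group characters into runs.

```python
import re

sample_text = "Revenue rose 12% in Q3 2019."

digits = re.findall(r"\d", sample_text)    # every single digit
numbers = re.findall(r"\d+", sample_text)  # runs of digits
words = re.findall(r"\w+", sample_text)    # runs of word characters
chunks = re.split(r"\s+", sample_text)     # split on whitespace

print(digits)   # ['1', '2', '3', '2', '0', '1', '9']
print(numbers)  # ['12', '3', '2019']
print(words)    # ['Revenue', 'rose', '12', 'in', 'Q3', '2019']
print(chunks)   # ['Revenue', 'rose', '12%', 'in', 'Q3', '2019.']
```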

Word Boundaries

Another special sequence we can create using the backslash is \b, which matches word boundaries.

A word is defined as a sequence of alphanumeric characters.

A boundary is defined as white space, non-alphanumeric characters, or the beginning or end of a string.

For example, if we want to find instances of "class" only where it starts a word, so that it matches in "classroom" but not in "subclass", we can use:

regex = re.compile(r'\bclass')

matches = regex.finditer(sample_text)

for match in matches:

    print(match)

Simple Metacharacters

Now let's look at the following metacharacters: . ^ $

- The dot . matches any character except for newline \n characters

- The caret ^ matches a sequence of characters only when they appear at the beginning of a string

- The dollar sign $ is used to match a sequence of characters when they appear at the end of a string
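
These three metacharacters can be tried out on a short, made-up string. Note that a literal period has to be escaped as \. because an unescaped dot matches any character.

```python
import re

sample_text = "Profit fell. Revenue rose."

# . matches any single character except a newline
dot_hits = re.findall(r"r.se", sample_text)

# ^ anchors the match to the beginning of the string
starts_with_profit = re.search(r"^Profit", sample_text) is not None
starts_with_revenue = re.search(r"^Revenue", sample_text) is not None

# $ anchors the match to the end of the string
ends_with_rose = re.search(r"rose\.$", sample_text) is not None

print(dot_hits)             # ['rose']
print(starts_with_profit)   # True
print(starts_with_revenue)  # False
print(ends_with_rose)       # True
```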

Substitutions

If we want to replace patterns within a string we can use the .sub() method. For example, if we want to replace "&" with "and" we can do so as follows:

# create a regular expression object with regex '&'

regex = re.compile(r'&')

# substitute '&' with 'and'

new_text = regex.sub(r'and', sample_text)

6. Introduction to BeautifulSoup

Now that we know how to use regular expressions to find patterns in text, let's look at text that is already formatted as an HTML web page, which can be difficult to handle with regexes alone if you're starting from scratch.

Luckily, we can make use of the BeautifulSoup library, which allows us to pull data out of HTML and XML files. Not all 10-Ks, however, will be in HTML or XML format, so that is something to keep in mind.

Parsers

In BeautifulSoup, the role of the parser is to build a data structure in a hierarchical tree format that can easily be searched.

This means the parser divides complex files into simpler parts and tracks how the parts relate to each other.

The library supports multiple parsers, but we'll be using the lxml parser, which must be installed in the terminal with pip install lxml.

Parsing an HTML File with BeautifulSoup

In order to parse an HTML file with BeautifulSoup, we need to pass the file into the constructor as follows: BeautifulSoup(file, 'parser'), which returns a BeautifulSoup object.

This constructor turns the file into a complex tree of Python objects as mentioned.

We can then search this BeautifulSoup object with a variety of methods.

Navigating the Parse Tree

The easiest way to navigate the parse tree is by using the HTML or XML tags.

For example, we can access the title in our <title> tag in our file object as follows:

from bs4 import BeautifulSoup

# parse the file with the lxml parser

with open('./sample.html') as f:

    file = BeautifulSoup(f, 'lxml')

# read the text inside the <title> tag

title = file.head.title.get_text()

Searching the Parse Tree

BeautifulSoup provides various methods to search the parse tree, although for now we'll just look at the .find_all() method.

The .find_all(filter) method searches the entire file for the given filter, which can be a string with the HTML or XML tag, a tag attribute, or a regular expression.

For example, assuming we already have our sample text loaded into the page_content object, we can find all the <p> tags as follows:

p_list = page_content.find_all('p')

We can also search for multiple tags at once by passing them in as a list to the .find_all() method.

Searching by Class & Regexes

If we want to search by class, for example class="h1style", we can't simply pass class as a keyword argument to the .find_all() method since class is a reserved keyword in Python.

Instead, we can search for the class attribute with BeautifulSoup using the keyword class_, for example:

# print tags with attribute class = 'h1style'

for tag in page_content.find_all(class_='h1style'):

    print(tag)

We can also search with regular expressions using the .find_all() method as follows (assuming we've already opened the HTML file):

import re

# print tag names of all tags whose name contains the letter 'a'

for tag in page_content.find_all(re.compile(r'a')):

    print(tag.name)

Requests Library

Now that we've reviewed how to use BeautifulSoup to extract data from HTML and XML files, we still need to access the websites to scrape data.

To do this we'll use the requests library to get data. For example, we can scrape any website as follows:

import requests

# create a Response object

r = requests.get('https://yourwebsite.com')

# get HTML data

html_data = r.text

Here html_data is a string of the HTML data from the website, which can then be passed to the BeautifulSoup constructor.

7. Basic NLP Analysis

Now that we've reviewed several libraries for natural language processing, let's look at several ways we can turn text from 10-Ks into useful data that can be used for trading strategies.

We'll focus on three metrics for financial analysis:

- Readability: how easy the text is to read

- Sentiment: how positive or negative the text is

- Similarity: how much periodic documents change over time

Readability

10-Ks are long and complicated documents. Since this complex text may be related to potential risk factors for the company, we want a way to quantify its complexity.

One way to do this is to calculate readability indices, which capture how easy or complex a given document is to read.

Readability indices are calculated based on two observations:

- Longer sentences tend to be less easy to read

- Longer words also tend to be less easy to read

Using these observations we can calculate two common readability indices:

According to these two readability metrics, 10-Ks are actually more complex than articles on theoretical physics.
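
As one illustration of a readability index built on these two observations, the sketch below computes the Flesch-Kincaid grade level, which combines average sentence length with average syllables per word. Choosing this particular index is an assumption made for the example, since the indices are not named above. The syllable counter is a crude vowel-group heuristic and the two sample texts are invented.

```python
import re


def count_syllables(word):
    """Rough estimate: count groups of consecutive vowels (minimum 1)."""
    groups = re.findall(r"[aeiouy]+", word.lower())
    return max(1, len(groups))


def flesch_kincaid_grade(text):
    """Flesch-Kincaid grade level; higher values mean harder text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    return 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59


simple = "The cat sat. The dog ran."
dense = (
    "The consolidated subsidiaries experienced considerable regulatory "
    "uncertainty regarding international derivative obligations."
)

print(flesch_kincaid_grade(simple))
print(flesch_kincaid_grade(dense))
```

The dense sentence scores far higher than the simple one because it has both more words per sentence and more syllables per word.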

Bag-of-Words

In addition to just analyzing the complexity of the text, we also want deeper insights into the word compositions.

For example, we can uncover potential risk factors by looking for words like "risks", "lawsuits", "bankruptcy", and so on.

One way we can quantify these word compositions is by condensing a document into a bag of words, which as discussed treats each document as an unordered collection, or "bag", of words.

One potential issue with using bag-of-words on 10-Ks is that it ignores the order of words, and the same words in a different order can mean completely different things. That said, it is still a good starting point for analysis because of its simplicity.

Sentiment Analysis

After we have a bag-of-words, we can then categorize the bags based on sentiment.

For example, the risk-related terms mentioned above indicate potentially negative issues with the company. Conversely, terms like "breakthrough", "innovation", and "approval" can be indicative of positive developments.

To do this, we can use a wordlist for each category and assign every word in the document that appears on a list to the corresponding category.

We can then get a sentiment score by calculating the proportion of words that match the positive and negative wordlists. We can also create other categories such as a legal or uncertainty category.

Two useful wordlists include the Harvard Wordlist for general document processing and the Loughran-McDonald Wordlist for financial documents.
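
A minimal sketch of wordlist-based scoring is shown below. The POSITIVE and NEGATIVE sets are tiny, hypothetical lists built from the example terms in this section; a real analysis would load a published wordlist instead. The sentiment_scores function name is also an assumption.

```python
import re
from collections import Counter

# Hypothetical, tiny wordlists for illustration only
POSITIVE = {"breakthrough", "innovation", "approval"}
NEGATIVE = {"risk", "risks", "lawsuit", "lawsuits", "bankruptcy"}


def sentiment_scores(text):
    """Return the share of tokens that fall in each wordlist."""
    tokens = re.findall(r"[a-z]+", text.lower())
    if not tokens:
        return {"positive": 0.0, "negative": 0.0}
    counts = Counter(tokens)
    positive = sum(counts[w] for w in POSITIVE)
    negative = sum(counts[w] for w in NEGATIVE)
    return {
        "positive": positive / len(tokens),
        "negative": negative / len(tokens),
    }


scores = sentiment_scores(
    "Pending lawsuits create bankruptcy risk despite the recent approval."
)
print(scores)
```

Adding another category, such as legal or uncertainty terms, only requires another set and another entry in the returned dictionary.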

Frequency Reweighting with TF-IDF

So far we've just counted each word with equal weight, although in language certain words are naturally more common than others.

To account for this we can reduce the importance of frequently used words in the bag-of-words.

This can be done with TF-IDF, which as mentioned above highlights words that are more unique to a document, which may make it better at characterizing it.

Similarity Metrics

Since financial documents are released periodically, another useful thing to quantify is how they change over time.

We can track readability and sentiment over time, but these metrics typically only capture one specific feature of a document. Instead, we want to find a way to directly compare two documents.

To do this, we can use similarity metrics to convert two documents into a number representing their degree of similarity.

We can construct this by converting each document into a vector of TF-IDF weights.

We can then use linear algebra to compare these vectors.

A common metric we can use is the cosine similarity, which is:

...a measure of similarity between two non-zero vectors of an inner product space. It is defined to equal the cosine of the angle between them, which is also the same as the inner product of the same vectors normalized to both have length 1.

Another common metric is the Jaccard similarity coefficient, which is a statistic used for gauging the similarity and diversity of sample sets.
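
Both metrics can be sketched in a few lines of plain Python. For brevity this example compares raw word counts rather than TF-IDF weights, and the two one-line "documents" (named doc_2018 and doc_2019 here) are invented for illustration.

```python
import math
from collections import Counter


def cosine_similarity(a, b):
    """Cosine of the angle between two sparse vectors stored as dicts."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def jaccard_similarity(a, b):
    """Size of the intersection divided by the size of the union."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


doc_2018 = "we face litigation risk".split()
doc_2019 = "we face currency risk".split()

cos = cosine_similarity(Counter(doc_2018), Counter(doc_2019))
jac = jaccard_similarity(doc_2018, doc_2019)

print(cos)
print(jac)
```

The two documents share three of their four words, giving a cosine similarity of about 0.75 and a Jaccard similarity of about 0.6. A sudden drop in either value between consecutive filings would flag a document worth reading closely.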

Summary: Natural Language Processing with Python

In this guide, we introduced the core concepts of natural language processing with Python. After that, we looked at the NLP pipeline including text processing and feature extraction.

We then looked at several useful tools to pull information from text, including regexes and the BeautifulSoup library.

In subsequent articles, we'll focus on model development using recent advances in machine learning to analyze and extract useful insights from text data.