In our previous guides on prompt engineering, we saw how small tweaks to the input can result in notable differences in the performance of large language models.

In case you're unfamiliar with the topic...

Prompt engineering refers to the process of refining the input to a language model (or another generative AI model) to produce the desired output, without updating the model's weights as you would with fine-tuning.

For example, as highlighted in our guide to improving responses & reliability, one interesting technique for GPT-3 was simply adding "Let's think step by step" to prompts for problem-solving tasks (much of this behavior has since been integrated into GPT-4).

On a benchmark of math word problems, the "Let's think step by step" trick raised GPT-3's solve rate massively, from a worthless 18% to a decent 79%!

In this guide, we'll go beyond the simple "think step by step" trick and review a few of the more advanced prompt engineering concepts & techniques, including:

- The Basics: Zero-Shot and Few-Shot Prompting

- Chain of Thought (CoT) Prompting

- Self-Consistency

- Generated Knowledge Prompting

- ReAct

Combining carefully designed input prompts with these more advanced techniques is a prompt engineering skill set that can give you a real edge in the coming years.


Zero-Shot & Few-Shot Prompting

Before getting to a few more advanced techniques, let's first review the basics.

Zero-Shot Prompts

Zero-shot prompting refers to simply asking a question or presenting a task to an LLM without including any examples of that task in the prompt.

With zero-shot prompting, we expect the model to respond based only on what it learned during training, without examples of the desired output or additional context for the task at hand. For example:

Analyze the sentiment of the following tweet and classify it as positive, negative, or neutral.

Tweet: "Prompt engineering is so fun."

Sentiment:

Few-Shot Prompts

As the name suggests, few-shot prompting refers to presenting a model with a task or question along with a few examples of the desired output.

For example, if we want to come up with a list of potential new features for a SaaS product based on customer problems, we could use the following few-shot prompt to steer the model in the direction we want:

### Identify suitable features for project management software based on the given problems:

Problem 1:

Teams have difficulty in tracking the progress of their projects.

Feature 1:

Progress tracking dashboard with visual indicators and real-time updates.

Problem 2:

Collaboration among team members is not efficient.

Feature 2:

In-app chat and file sharing for seamless communication and collaboration.

Problem 3:

Project managers struggle to allocate resources effectively.

Feature 3:

Resource management module with workload analysis and smart resource allocation.

---

Problem 4:

Managing and prioritizing tasks is time-consuming.

Please suggest a feature to address Problem 4:

Few-shot prompting is useful for tasks that require a bit of reasoning or a specific output format, and it is a quick way to steer the model toward your desired output.
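To make the structure of the prompt above explicit, here is a minimal Python sketch that assembles it from (problem, feature) pairs. The `build_few_shot_prompt` function is our own hypothetical helper rather than part of any library, and its layout simply mirrors the example; passing no examples turns it into a zero-shot prompt for comparison.

```python
from typing import List, Sequence, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: Sequence[Tuple[str, str]],
    new_problem: str,
) -> str:
    """Build a prompt from an instruction, worked (problem, feature) pairs, and a new problem.

    An empty `examples` sequence turns this into a zero-shot prompt.
    """
    lines: List[str] = [f"### {instruction}", ""]
    for i, (problem, feature) in enumerate(examples, start=1):
        lines += [f"Problem {i}:", problem, f"Feature {i}:", feature, ""]
    if examples:
        lines.append("---")  # separate the worked examples from the new task
    n = len(examples) + 1
    lines += [f"Problem {n}:", new_problem, f"Please suggest a feature to address Problem {n}:"]
    return "\n".join(lines)


examples = [
    ("Teams have difficulty in tracking the progress of their projects.",
     "Progress tracking dashboard with visual indicators and real-time updates."),
    ("Collaboration among team members is not efficient.",
     "In-app chat and file sharing for seamless communication and collaboration."),
]
instruction = "Identify suitable features for project management software based on the given problems:"
prompt = build_few_shot_prompt(instruction, examples, "Managing and prioritizing tasks is time-consuming.")
print(prompt)
```

Keeping the examples in a list rather than hard-coding them makes it easy to swap examples in and out and see how each one changes the model's output.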

A closely related topic is few-shot learning, which is discussed in the GPT-3 paper, Language Models are Few-Shot Learners:

Recent work has demonstrated substantial gains on many NLP tasks and benchmarks by pre-training on a large corpus of text followed by fine-tuning on a specific task. While typically task-agnostic in architecture, this method still requires task-specific fine-tuning datasets of thousands or tens of thousands of examples. By contrast, humans can generally perform a new language task from only a few examples or from simple instructions - something which current NLP systems still largely struggle to do.

Here we show that scaling up language models greatly improves task-agnostic, few-shot performance, sometimes even reaching competitiveness with prior state-of-the-art fine-tuning approaches.

Chain of Thought (CoT) Prompting

Another important technique is chain of thought (CoT) prompting, which is used to improve performance on more complex reasoning tasks and to elicit more context-aware responses.

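CoT prompting was proposed by Wei et al. at Google in 2022 in Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. As the authors write: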

We explore how generating a chain of thought -- a series of intermediate reasoning steps -- significantly improves the ability of large language models to perform complex reasoning. In particular, we show how such reasoning abilities emerge naturally in sufficiently large language models via a simple method called chain of thought prompting, where a few chain of thought demonstrations are provided as exemplars in prompting.

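With CoT prompting, we ask the model to produce a few intermediate reasoning steps before it gives the final answer. The original paper does this with few-shot exemplars that include worked reasoning, while a simpler zero-shot variant just appends "Let's think step-by-step" to the question. For example, we could use this zero-shot CoT prompt in the context of customer churn: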

User: Q: A subscription-based software company has 1000 customers. This month, they acquired 200 new customers and lost 50 due to churn. What is the net customer growth rate for the company? Let's think step-by-step.
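For reference, the step-by-step reasoning we'd hope the model produces is: net new customers = 200 - 50 = 150, and net growth rate = 150 / 1000 = 15%. The sketch below encodes those two steps in a hypothetical helper, `net_customer_growth_rate`, and adds an equally hypothetical `last_percentage` parser so a model's final answer can be checked against the ground truth. The sample reply string is made up for illustration.

```python
import re
from typing import Optional


def net_customer_growth_rate(start: int, acquired: int, churned: int) -> float:
    """Net customer growth as a fraction of the starting customer base."""
    if start <= 0:
        raise ValueError("start must be a positive number of customers")
    net_new = acquired - churned  # step 1: 200 - 50 = 150 net new customers
    return net_new / start        # step 2: 150 / 1000 = 0.15


def last_percentage(reply: str) -> Optional[float]:
    """Return the last 'N%' figure in a model reply as a fraction, if any."""
    matches = re.findall(r"(-?\d+(?:\.\d+)?)\s*%", reply)
    return float(matches[-1]) / 100 if matches else None


expected = net_customer_growth_rate(1000, 200, 50)
print(f"{expected:.0%}")  # prints 15%

# A made-up model reply, used only to demonstrate the check.
reply = "Net new customers: 200 - 50 = 150. Growth rate: 150 / 1000 = 15%."
parsed = last_percentage(reply)
print(parsed is not None and abs(parsed - expected) < 1e-9)  # prints True
```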

Another interesting paper on this topic is Automatic Chain of Thought Prompting in Large Language Models:

We propose an automatic prompting method (Auto-CoT) to elicit chain-of-thought reasoning in large language models without needing manually-designed demonstrations.
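As we understand the paper, Auto-CoT has two stages: cluster the questions in a dataset, then build one demonstration per cluster by running zero-shot CoT ("Let's think step by step.") on a representative question, discarding questions or rationales that are too long. The sketch below covers only the second stage. It assumes the clusters are already computed (the paper uses sentence embeddings and k-means), with each cluster sorted by closeness to its center; `complete` is a placeholder for any LLM call, and the 60-word / 5-line limits are illustrative thresholds in the spirit of the paper's heuristics rather than its exact rules.

```python
from typing import Callable, List, Sequence

TRIGGER = "Let's think step by step."


def build_auto_cot_demos(
    clusters: Sequence[Sequence[str]],
    complete: Callable[[str], str],
    max_question_words: int = 60,
    max_steps: int = 5,
) -> List[str]:
    """Build at most one zero-shot-CoT demonstration per (pre-computed) cluster."""
    demos: List[str] = []
    for cluster in clusters:
        for question in cluster:  # assumed sorted: most central question first
            if len(question.split()) > max_question_words:
                continue  # skip overly long questions
            prompt = f"Q: {question}\nA: {TRIGGER}"
            rationale = complete(prompt).strip()
            steps = [line for line in rationale.splitlines() if line.strip()]
            if len(steps) > max_steps:
                continue  # skip overly long rationales
            demos.append(f"{prompt} {rationale}")
            break  # one demonstration per cluster
    return demos


def auto_cot_prompt(demos: Sequence[str], question: str) -> str:
    """Prepend the generated demonstrations to a new test question."""
    return "\n\n".join([*demos, f"Q: {question}\nA: {TRIGGER}"])


def fake_complete(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM call; always returns a short rationale.
    return "Start with the numbers given.\nCombine them as the question asks.\nSo the answer follows."


clusters = [["What is 3 + 2?"], ["Tom has 4 apples and eats 1. How many are left?"]]
demos = build_auto_cot_demos(clusters, fake_complete)
print(auto_cot_prompt(demos, "What is 7 - 4?"))
```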

Self-Consistency

Self-consistency is another interesting prompting technique that aims to improve chain of thought prompting for more complex reasoning problems.

As the authors of Self-Consistency Improves Chain of Thought Reasoning in Language Models write:

...we propose a new decoding strategy, self-consistency, to replace the naive greedy decoding used in chain-of-thought prompting. It first samples a diverse set of reasoning paths instead of only taking the greedy one, and then selects the most consistent answer by marginalizing out the sampled reasoning paths.

Self-consistency leverages the intuition that a complex reasoning problem typically admits multiple different ways of thinking leading to its unique correct answer.

In other words, self-consistency involves sampling multiple reasoning paths with few-shot chain of thought prompting (rather than taking only the single greedy output) and then choosing the most consistent answer, i.e. the final answer that the sampled paths agree on most often.
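A minimal sketch of that idea in Python: sample several chain-of-thought completions, pull out each final answer, and take a majority vote. The `sample` callable is a hypothetical stand-in for an LLM call made with a non-zero temperature (so the reasoning paths differ), and the assumption that each completion ends with "The answer is X." is modeled on the style of chain of thought exemplars, not taken from any library. Majority voting is the simplest way to "marginalize out" the reasoning paths; the paper also compares probability-weighted variants.

```python
import re
from collections import Counter
from itertools import cycle
from typing import Callable, List, Optional


def extract_answer(completion: str) -> Optional[str]:
    """Pull a numeric final answer from text like '... the answer is 18.' (assumed format)."""
    match = re.search(r"answer is\s*\$?(-?\d[\d,]*(?:\.\d+)?)", completion)
    return match.group(1).replace(",", "") if match else None


def self_consistent_answer(
    prompt: str,
    sample: Callable[[str], str],
    n_samples: int = 10,
) -> Optional[str]:
    """Sample several reasoning paths and return the most common final answer."""
    answers: List[str] = []
    for _ in range(n_samples):
        answer = extract_answer(sample(prompt))  # each call should use temperature > 0
        if answer is not None:
            answers.append(answer)
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


# Hypothetical canned completions standing in for sampled reasoning paths.
canned = cycle([
    "... so the answer is 18.",
    "... so the answer is 26.",
    "... so the answer is 18.",
])


def fake_sample(prompt: str) -> str:
    return next(canned)


print(self_consistent_answer("Q: ...", fake_sample, n_samples=3))  # prints 18
```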

Generated Knowledge Prompting

A common practice in prompt engineering is augmenting a query with additional knowledge before sending the final API call to GPT-3 or GPT-4.

A similar idea was proposed in the paper Generated Knowledge Prompting for Commonsense Reasoning. Instead of retrieving additional context from an external source (e.g. a vector database), the authors use an LLM to generate its own knowledge statements and then incorporate those into the prompt to improve commonsense reasoning.

As the authors write:

...we develop generated knowledge prompting, which consists of generating knowledge from a language model, then providing the knowledge as additional input when answering a question. Our method does not require task-specific supervision for knowledge integration, or access to a structured knowledge base, yet it improves performance of large-scale, state-of-the-art models...
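Here is a minimal two-stage sketch under our own assumptions: `generate_knowledge` stands in for an LLM prompted (for example, with a few demonstrations) to write short knowledge statements about the question, and `score` stands in for the model's confidence that an answer choice is correct given a prompt. Following our reading of the paper, each knowledge statement is prepended to the question in turn (alongside the bare question), and we keep the answer choice with the single highest confidence across all of these prompts. The toy functions in the usage lines are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple


def generated_knowledge_answer(
    question: str,
    choices: Sequence[str],
    generate_knowledge: Callable[[str], List[str]],
    score: Callable[[str, str], float],
) -> Tuple[str, str]:
    """Return (best answer, knowledge statement that supported it)."""
    # Stage 1: generate knowledge; keep the bare question ("" knowledge) as a baseline.
    knowledge = [""] + generate_knowledge(question)
    best_score, best_choice, best_knowledge = float("-inf"), choices[0], ""
    # Stage 2: answer with each knowledge statement prepended to the question.
    for k in knowledge:
        prompt = f"{k} {question}".strip()
        for choice in choices:
            s = score(prompt, choice)
            if s > best_score:
                best_score, best_choice, best_knowledge = s, choice, k
    return best_choice, best_knowledge


def fake_knowledge(question: str) -> List[str]:
    # Hypothetical stand-in for LLM-generated knowledge statements.
    return ["Penguins are birds that cannot fly.", "Penguins are strong swimmers."]


def fake_score(prompt: str, choice: str) -> float:
    # Toy scorer: confident in "no" only when the prompt says penguins cannot fly.
    if "cannot fly" in prompt:
        return 0.9 if choice == "no" else 0.1
    return 0.5


answer, used = generated_knowledge_answer("Can penguins fly?", ["yes", "no"], fake_knowledge, fake_score)
print(answer, "|", used)
```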

ReAct

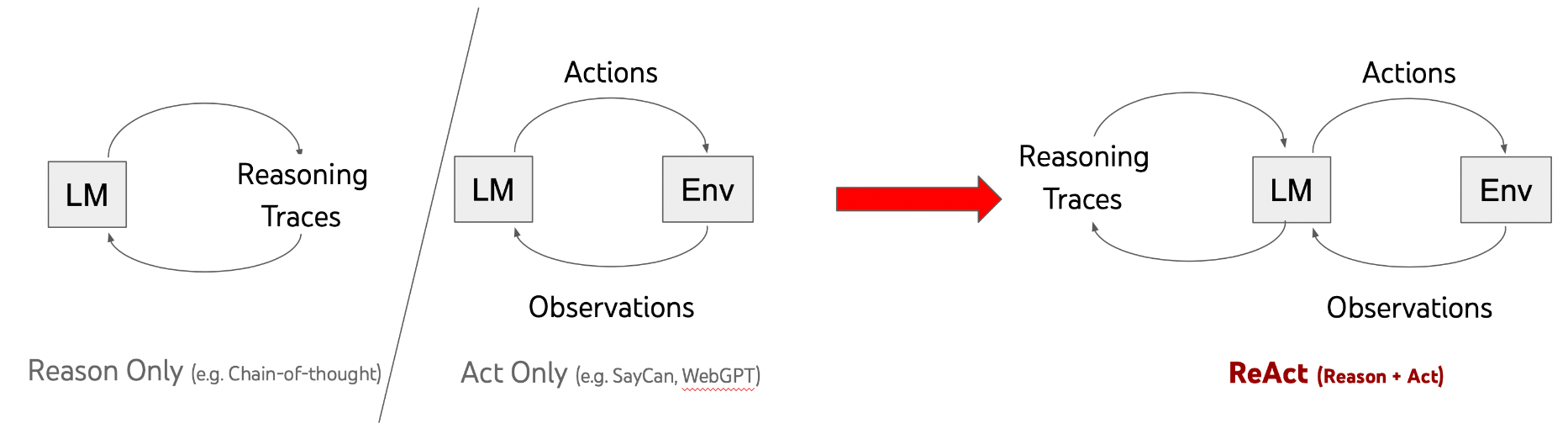

Proposed by Yao et al. in 2022 in the paper ReAct: Synergizing Reasoning and Acting in Language Models, ReAct is another interesting prompt engineering technique in which LLMs generate both reasoning traces and task-specific actions in an interleaved manner. As the authors write:

...we explore the use of LLMs to generate both reasoning traces and task-specific actions in an interleaved manner, allowing for greater synergy between the two: reasoning traces help the model induce, track, and update action plans as well as handle exceptions, while actions allow it to interface with external sources, such as knowledge bases or environments, to gather additional information.

In other words, the model generates reasoning traces (e.g. chain of thought) to create and update action plans, while actions and their observations allow it to retrieve information and interact with external tools and knowledge sources.
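Below is a minimal Python sketch of the ReAct loop, assuming a Thought / Action / Observation transcript format like the one in the paper's examples, with actions written as `Tool[input]` and `Finish[answer]` ending the episode. `llm` and the `tools` dictionary are hypothetical stand-ins: in practice, `llm` would call a model with a stop sequence on "Observation:" so the model never writes its own observations, and each tool would wrap a real search API, database, or environment.

```python
import re
from typing import Callable, Dict, Optional

# Matches lines like "Action: Search[query]" or "Action 2: Finish[answer]".
ACTION_RE = re.compile(r"Action(?: \d+)?:\s*(\w+)\[(.*)\]")


def react(
    question: str,
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> Optional[str]:
    """Run a minimal Thought -> Action -> Observation loop; return the Finish answer."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # model writes the next thought and action
        transcript += step.rstrip() + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            transcript += "Observation: Invalid action.\n"
            continue
        name, arg = match.groups()
        if name == "Finish":
            return arg
        tool = tools.get(name)
        observation = tool(arg) if tool else f"Unknown tool '{name}'."
        transcript += f"Observation: {observation}\n"  # feed the result back to the model
    return None  # no answer within max_steps


# Hypothetical scripted "model" and toy knowledge source, for illustration only.
script = iter([
    "Thought: I need to find the capital of France.\nAction: Search[capital of France]",
    "Thought: The observation answers the question.\nAction: Finish[Paris]",
])
toy_kb = {"capital of France": "Paris is the capital of France."}

answer = react(
    "What is the capital of France?",
    llm=lambda transcript: next(script),
    tools={"Search": lambda q: toy_kb.get(q, "No results.")},
)
print(answer)  # prints Paris
```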

Summary: Prompt Engineering Techniques

With the field of large language models and generative AI showing no signs of slowing down, prompt engineering looks set to remain a valuable skill set for the foreseeable future.

In this guide, we looked at a few of the more interesting and advanced prompt engineering techniques, including:

- Zero-Shot and Few-Shot Prompting

- Chain of Thought (CoT) Prompting

- Self-Consistency

- Generated Knowledge Prompting

- ReAct

Of course, we've only scratched the surface of each of these techniques and papers, and there are many other interesting techniques out there. We'll continue to keep you updated on the most exciting advances.

Until then, here are a few other excellent resources and papers on prompt engineering techniques.

Prompt Engineering Resources

- A Guide to Prompt Engineering

- Learn Prompting

- Prompt Papers

- Language Models are Few-Shot Learners

- Chain-of-Thought Prompting Elicits Reasoning in Large Language Models

- Self-Consistency Improves Chain of Thought Reasoning in Language Models

- Generated Knowledge Prompting for Commonsense Reasoning

- ReAct: Synergizing Reasoning and Acting in Language Models