Artificial intelligence is set to see dramatic growth in adoption across many verticals in the coming years.

The currently popular implementation of AI inference and training in the cloud, however, is not suitable for many use cases.

This limitation has created a huge opportunity for "edge AI", which this leading edge AI company defines as:

Edge AI means that AI algorithms are processed locally on a hardware device. The algorithms are using data (sensor data or signals) that are created on the device.

In this article we will cover the main drivers that are shifting AI to the edge, how the technology stack for AI is changing to reflect the shift, and the market opportunity in edge AI.

In terms of the market for Edge AI, this Forbes article describes:

The edge is where real money is being invested right now, and putting AI there even to get a small sliver of increased productivity will have a massive impact.

This article will address the following questions:

- How is AI currently being implemented?

- Why is AI processing shifting to the edge?

- What are the use cases that will drive these changes?

- How big is the market opportunity in edge AI implementation?

1. Overview of AI

Artificial intelligence, and in particular deep learning, is one of the greatest technological breakthroughs of modern times.

The technology will enable many companies to increase their productivity and improve operational efficiency through the implementation of intelligent processes and automation.

The real breakthrough is that we can actually make sense of all the data generated by the roughly 25 billion connected devices worldwide, otherwise known as the Internet of Things (IoT).

In a nutshell, artificial intelligence is a new computing approach that allows machines to perform intelligent tasks with little to no assistance from humans.

Artificial intelligence all starts with gathering relevant data from the sensors, devices, and applications around us.

This data is then sent to a training engine to be structured, classified, and filtered in order to identify patterns, behaviours, and trends from all of our collective data points.

Once the patterns are identified, they are passed to the inference engine, which is responsible for recognizing those patterns in new data, making sense of them, and taking intelligent actions.
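The split between training and inference described above can be illustrated with a minimal sketch. It is not how any particular AI product works: the nearest-centroid model, the function names `train` and `infer`, and the temperature readings are all assumptions chosen to keep the example small.

```python
from statistics import mean

def train(samples):
    """Training step: group labelled readings and learn one average (centroid) per label."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}

def infer(model, value):
    """Inference step: assign a new reading to the label whose centroid is closest."""
    return min(model, key=lambda label: abs(model[label] - value))

# Hypothetical temperature readings (degrees Celsius) with made-up labels.
readings = [
    (20.0, "normal"), (22.0, "normal"), (21.0, "normal"),
    (80.0, "overheating"), (85.0, "overheating"),
]

model = train(readings)      # the heavy step, usually run where compute is plentiful
print(infer(model, 78.5))    # the light step, run on each new reading
```

Training needs the whole data set, while inference only needs the small trained model and one new reading. That asymmetry is what makes it possible to run the two steps in different places.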

Traditionally, both training and inference require significant data processing, and for years this has prevented AI from taking off.

However, with improvements in both processing and communication technology, AI is now becoming entrenched in many industries.

2. Edge AI Market Size

Research in the field estimates that by 2023 a total of 3.2 billion devices will have shipped with AI-enabled capabilities.

Examples of this include smart manufacturing devices in the industrial sector, building efficiency and automation devices, robotics monitoring, and many other industrial sensor devices.

Aside from smart manufacturing, this research shows that consumer electronics will constitute the majority of shipments, although industrial applications are catching up and present a major opportunity in the next 5 years.

3. How is AI Implemented?

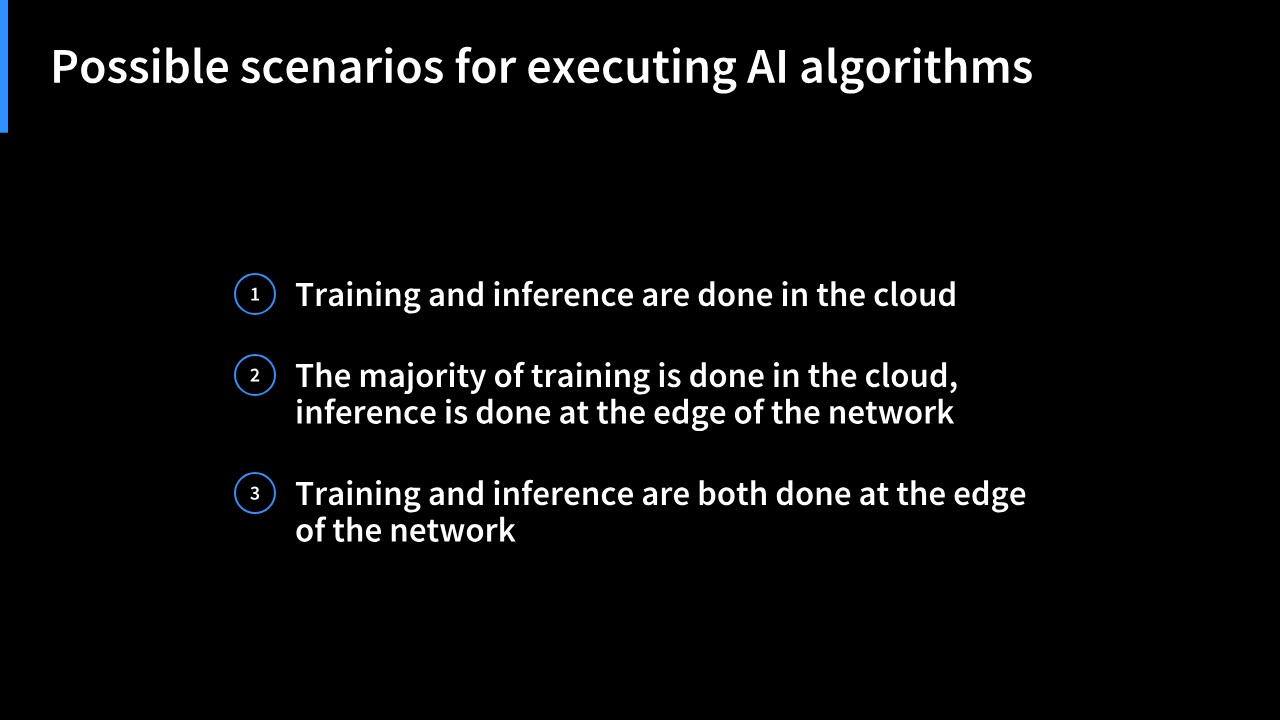

There are three possible scenarios for executing artificial intelligence algorithms.

Scenario 1: Training and inference are all done in the cloud

This is currently the most popular implementation for many industries that use crowd-sourced data. This scenario is highly dependent on constant communication between the devices generating data and the cloud executing the algorithms.

As this article on edge AI describes:

However, in many cases, inference running entirely in the cloud will have issues for real-time applications that are latency-sensitive and mission-critical like autonomous driving. Such applications cannot afford the roundtrip time or rely on critical functions to operate when in variable wireless coverage.

Scenario 2: Majority of training is done in the cloud, inference is done at the edge of the network

This type of scenario is typically used for applications that require immediate inference. Examples of this include voice recognition, storage management, and power management applications.

Scenario 3: Training and inference are all done at the edge of the network

This scenario is often used for mission-critical applications that require near real-time performance and could be affected by delays in communication or process execution. Examples of this include industrial automation, robotics, and automotive applications (e.g. self-driving cars).
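The latency argument behind these scenarios comes down to simple arithmetic: a result is only useful if the network round trip plus the compute time fits within the application's deadline. The sketch below illustrates that check. The `Placement` class, the helper `placements_within_deadline`, and every number in it are illustrative assumptions, not measurements.

```python
from dataclasses import dataclass

@dataclass
class Placement:
    name: str
    network_round_trip_ms: float  # time to send data to the model and get a result back
    compute_ms: float             # time the model itself needs to produce a result

    def total_latency_ms(self) -> float:
        return self.network_round_trip_ms + self.compute_ms

def placements_within_deadline(placements, deadline_ms):
    """Return the names of the placements whose total latency meets the deadline."""
    return [p.name for p in placements if p.total_latency_ms() <= deadline_ms]

# Made-up figures: the cloud computes faster but pays for the network round trip,
# while the edge device computes more slowly but needs no network at all.
cloud = Placement("cloud inference", network_round_trip_ms=120.0, compute_ms=10.0)
edge = Placement("edge inference", network_round_trip_ms=0.0, compute_ms=40.0)

print(placements_within_deadline([cloud, edge], deadline_ms=50.0))
```

With these assumed figures only edge inference meets a 50 ms deadline, even though the cloud model is faster once the data arrives. Relaxing the deadline to 200 ms would make both placements acceptable.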

4. Cloud AI vs. Edge AI

Let's now look at how cloud AI and edge AI are positioned in the market, what value propositions they offer, and which business models they sustain.

Cloud AI is often offered as a service, billed either by the amount of data trained and processed (on a subscription or pay-per-use model) or through value-added services such as process monitoring, infrastructure planning, and cybersecurity.

The established players in the cloud AI market include Amazon, Google, IBM, Microsoft, Oracle, Facebook, and Alibaba.

There is also an increasing number of smaller players who offer specialized cloud AI services that address specific use cases and verticals.

The advantages of cloud AI include volume, velocity, and variability: the volume of data it can process, the velocity at which it can process that data, and the variability of the data it can handle.

The advantages of edge AI, on the other hand, include:

- The speed of processing

- Processing at the device

- The retention of data at the device

Edge AI can be split into three categories:

1. On-premise AI server

- This implementation is based on software licensing and is often designed to support many use cases

2. Intelligent gateways

- This is where AI is performed at a localized gateway to the edge device

3. On device

- This refers to hardware that can perform AI inference and training functions on the device itself. Training can also be delegated to the cloud, with inference done on the device.

5. Summary of Edge AI

The benefits of executing AI in the cloud include:

- Supercomputing capabilities

- Data can be extremely diverse and voluminous in the cloud

- Offers efficient and reliable training

A few of the drawbacks of cloud AI include:

- It is dependent on connectivity between devices and the cloud

- Data privacy and protection can be an issue

- It typically has a higher cost

The advantages of Edge AI include:

- Inference is done in near real-time, on the edge

- Inference is always on, meaning connectivity is not an issue

- Offers low latency for mission-critical tasks

- Can lead to lower AI implementation costs

The disadvantages of Edge AI include:

- Requires in-house knowledge and skills

- Requires data processing resources

In conclusion, there is a clear trend towards enterprise adoption of artificial intelligence at the edge.