With the release of OpenAI's GPT-3.5/4 function calling, it's now significantly easier to translate natural language into API calls in a structured and reliable way.

As OpenAI writes:

Developers can now describe functions to gpt-4-0613 and gpt-3.5-turbo-0613, and have the model intelligently choose to output a JSON object containing arguments to call those functions.

This is a new way to more reliably connect GPT's capabilities with external tools and APIs.

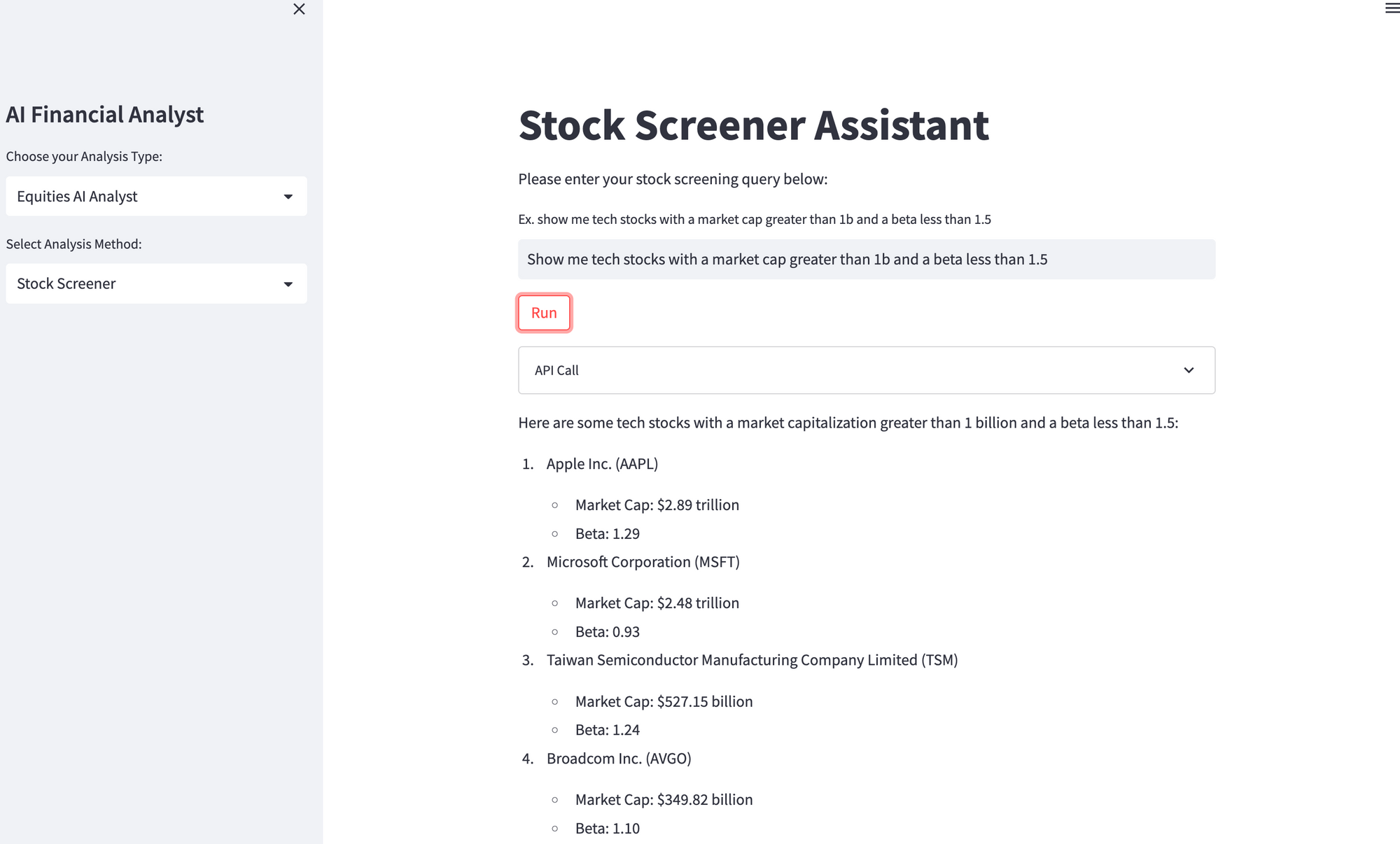

In this guide, we'll walk through a practical use case of function calling: turning natural language into a stock screening assistant. In particular, we'll use GPT's function calling to convert natural language into a structured stock screening API call at Financial Modeling Prep.

What is GPT Function Calling?

Before we get into the code, let's first review how GPT function calling works at a high level. As highlighted in the documentation:

The latest models (gpt-3.5-turbo-0613 and gpt-4-0613) have been fine-tuned to both detect when a function should be called (depending on the input) and to respond with JSON that adheres to the function signature.

At a high level, here are the steps we need to take to convert natural language into API calls with function calling:

- Define functions that GPT has access to: First, we need to define the functions that GPT will have access to call.

- Send the user's query & functions to GPT: Next, we make our first GPT API call, sending the user's query along with the functions GPT has access to. In this API call, we specify those functions with the new functions parameter. Each entry includes the function's name, a description that is used to decide if and when to call it, and the function's parameters in JSON Schema format.

- Parse the function call response: Next, we check whether the model decided to call a function. If so, it generates a JSON string with the function's name and arguments. This string is parsed into a Python object, and the function is called with the extracted arguments.

- Make another GPT API call with the function response: Next, we need to feed the function response back to GPT as a new message object, along with the user's initial query. GPT-4 then uses this information to generate a user-friendly summary of the function's results.
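The steps above can be sketched offline, without calling OpenAI. In this minimal sketch the model's reply is a hand-written stand-in (`fake_model_reply`) shaped like the `function_call` message the 0613 models return; `screen_stocks_stub`, `handle_reply`, and the data they produce are hypothetical and exist only for illustration.

```python
import json

# Step 1: a function the model is allowed to call (stub with made-up data).
def screen_stocks_stub(sector=None, limit=10):
    return json.dumps([{"symbol": "DEMO", "sector": sector}][:limit])

AVAILABLE_FUNCTIONS = {"screen_stocks_stub": screen_stocks_stub}

# Step 2 would send the user's query plus the function schema to the model.
# Here a hand-written reply stands in for the API response.
fake_model_reply = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "screen_stocks_stub",
        "arguments": '{"sector": "Technology", "limit": 1}',
    },
}

def handle_reply(user_query, reply):
    """Steps 3 and 4: run the requested function and build the follow-up messages."""
    call = reply.get("function_call")
    if call is None:
        # The model answered directly; there is nothing to execute.
        return None
    func = AVAILABLE_FUNCTIONS[call["name"]]
    args = json.loads(call["arguments"])  # arguments arrive as a JSON string
    result = func(**args)
    # These messages would be sent in the second API call.
    return [
        {"role": "user", "content": user_query},
        reply,
        {"role": "function", "name": call["name"], "content": result},
    ]

followup_messages = handle_reply("show me one tech stock", fake_model_reply)
print(followup_messages[-1])
```

The last message carries the function's output under the `function` role, which is what lets the model summarize the results in its second reply.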

Now that we have the high-level overview, let's see how we can apply this to the FMP API and build a natural language stock screening assistant.

Building a Stock Screening Assistant with GPT Function Calling

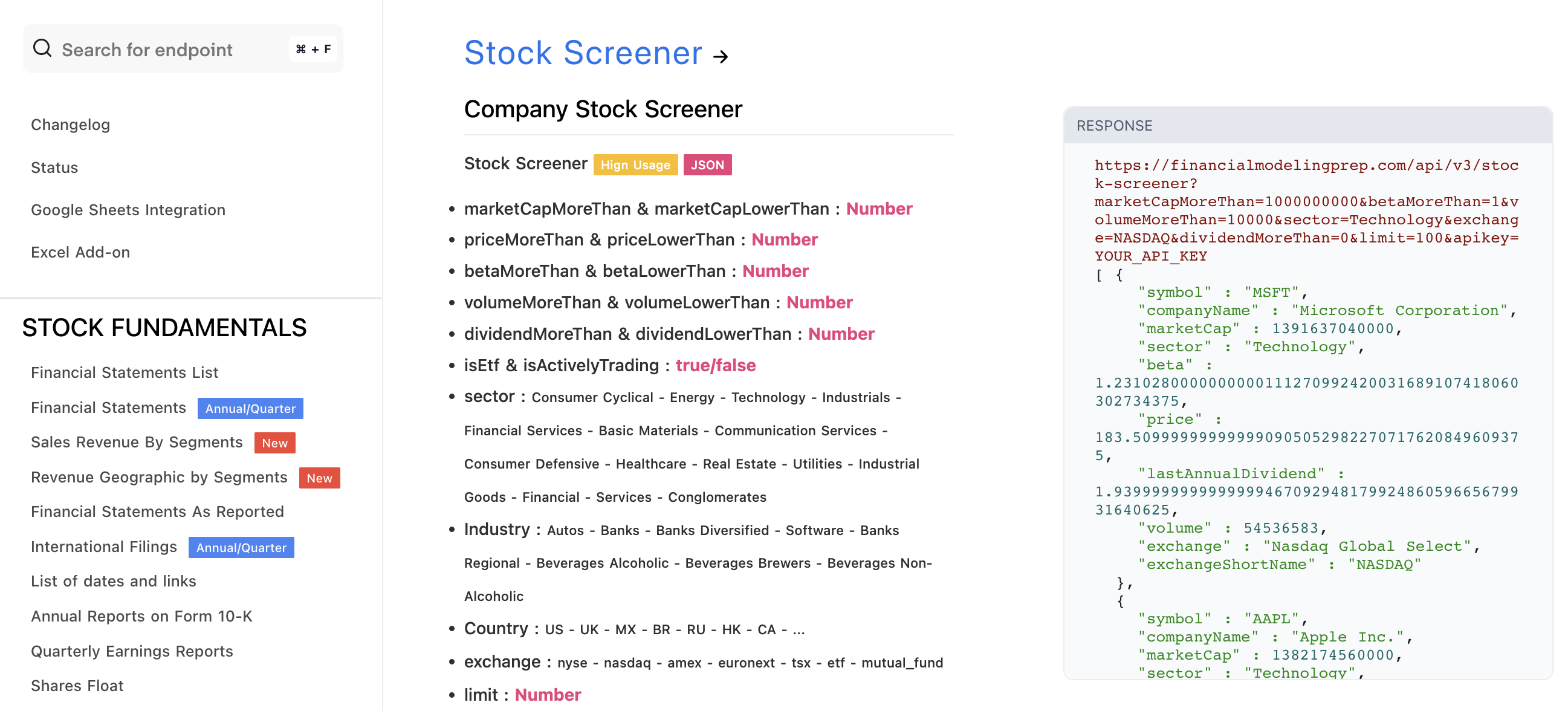

The stock screening assistant will use GPT-4's function calling capability and allow users to look for stocks in natural language based on the following parameters:

- Market Cap More or Less Than

- Price More or Less Than

- Beta More or Less Than

- Volume More or Less Than

- Dividend More or Less Than

- Is ETF

- Is Actively Trading

- Sector

- Industry

- Country

- Exchange

- Limit

Based on the user's query, GPT-4 will format the correct API call, and the API response will be fed into a second GPT call, which summarizes the results for the user.

Step 0: Installs & Imports

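First, we'll need to pip install streamlit, openai, and requests, and import the following libraries into our new stock_screener.py. Note that the code in this guide uses the openai.ChatCompletion interface, which is available in versions of the openai package before 1.0. We'll also need to set our OpenAI API key and Financial Modeling Prep API key: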

```python
import streamlit as st
import openai
import requests
import json

from apikey import OPENAI_API_KEY, FMP_API_KEY  # Be sure to replace these with your actual API keys

openai.api_key = OPENAI_API_KEY
```

Step 1: Define stock screening function

Next up, we need to define our function that will call the FMP company stock screener endpoint.

Here's an overview of how the stock_screener function works:

- Initialize parameters: This function accepts a variety of parameters, such as market cap, price, beta, volume, dividends, etc. These parameters are used to define the criteria for the stock screener.

- API and endpoint setup: Next, we define a base URL that points to the /stock-screener endpoint at the FMP API, along with our API key.

- Parameter dictionary: Next, we create a dictionary called params that provides the API call parameters and the API key. We also filter out any entries where the value is None.

- FMP API request: We then make a GET request to the FMP API with the constructed parameters using the requests library.

- Display the API call URL: This is optional, but we also create a UI element in Streamlit that shows the full URL of the constructed API call, so that we can quickly confirm or debug the call. Keep in mind that this URL includes the API key, so it is best removed before sharing the app.

- Response handling: Lastly, we parse the API's JSON response and return it as a string. If the API call was successful, it will contain a stringified JSON object with the stocks that meet the user's criteria.
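The parameter-dictionary step described above can be checked without touching the network. This sketch uses only the standard library to drop the `None` entries and encode the rest into a query string; `build_query` is a hypothetical helper and `DEMO_KEY` is a placeholder, not a real key.

```python
from urllib.parse import urlencode

BASE_URL = "https://financialmodelingprep.com/api/v3/stock-screener"

def build_query(api_key, **filters):
    """Return the full request URL, skipping filters that were not provided."""
    params = {"apikey": api_key, **filters}
    # Same filter as in stock_screener: keep only values that were set.
    params = {k: v for k, v in params.items() if v is not None}
    return f"{BASE_URL}?{urlencode(params)}"

url = build_query(
    "DEMO_KEY",
    marketCapMoreThan=1_000_000_000,
    betaLowerThan=1.5,
    sector=None,   # dropped: not provided
    isEtf=False,   # kept: False is a real value, not None
)
print(url)
```

Because the check is `is not None`, a `False` flag survives the filter and is sent explicitly, while unset filters never reach the API.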

```python
def stock_screener(market_cap_more_than=None, market_cap_less_than=None,
                   price_more_than=None, price_less_than=None,
                   beta_more_than=None, beta_less_than=None,
                   volume_more_than=None, volume_less_than=None,
                   dividend_more_than=None, dividend_less_than=None,
                   is_etf=False, is_actively_trading=False,
                   sector=None, industry=None, country='US',
                   exchange=None, limit=None):
    base_url = 'https://financialmodelingprep.com/api/v3/stock-screener'
    api_key = FMP_API_KEY

    params = {
        'apikey': api_key,
        'marketCapMoreThan': market_cap_more_than,
        'marketCapLowerThan': market_cap_less_than,
        'priceMoreThan': price_more_than,
        'priceLowerThan': price_less_than,
        'betaMoreThan': beta_more_than,
        'betaLowerThan': beta_less_than,
        'volumeMoreThan': volume_more_than,
        'volumeLowerThan': volume_less_than,
        'dividendMoreThan': dividend_more_than,
        'dividendLowerThan': dividend_less_than,
        'isEtf': is_etf,
        'isActivelyTrading': is_actively_trading,
        'sector': sector,
        'industry': industry,
        'country': country,
        'exchange': exchange,
        'limit': limit
    }
    params = {k: v for k, v in params.items() if v is not None}

    response = requests.get(base_url, params=params)

    # Format the request URL and params for display
    request_url = response.url
    st.expander("API Call").write(request_url)

    return json.dumps(response.json())
```

Step 2: Send user's query & functions to GPT-4

Now that we've defined our stock screening function, we need to send this function along with the user's query to our first GPT API call.

Here, we'll define a run_conversation function, and the first part of the code works as follows:

1. User Message: The run_conversation function takes the user's message as input.

2. First GPT API Call

We then make our first GPT API call by calling the ChatCompletion.create() method, passing in gpt-4-0613 (the GPT-4 model version that supports function calling), the user's message, and a list of functions.

The list of functions specifies the stock_screener function and its parameters. Here we also set the function_call argument to "auto", which allows GPT to decide when to call the stock_screener function based on the context of the conversation and the description of the function.

3. GPT Response Handling:

Next, we retrieve the message response from the initial API call, which contains the AI-generated text that may contain the request to call the stock_screener function, or a direct response to the user's question.
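The schema passed in `functions` is ordinary JSON Schema, so its repetitive parts can be generated rather than typed out. The sketch below builds the ten more/less-than properties in a loop and attaches a `description` to each, which JSON Schema allows; the helper name `build_screener_schema` and the description wording are assumptions for illustration, not part of the original code.

```python
import json

NUMERIC_FIELDS = ["market_cap", "price", "beta", "volume", "dividend"]

def build_screener_schema():
    """Build a function definition with the same properties as the hand-written one."""
    properties = {}
    for field in NUMERIC_FIELDS:
        label = field.replace("_", " ")
        properties[f"{field}_more_than"] = {
            "type": "number",
            "description": f"Only return stocks with {label} above this value",
        }
        properties[f"{field}_less_than"] = {
            "type": "number",
            "description": f"Only return stocks with {label} below this value",
        }
    for flag in ("is_etf", "is_actively_trading"):
        properties[flag] = {"type": "boolean"}
    for text_field in ("sector", "industry", "country", "exchange"):
        properties[text_field] = {"type": "string"}
    properties["limit"] = {"type": "number"}
    return {
        "name": "stock_screener",
        "description": "Fetch stock screening data from the FMP API",
        "parameters": {"type": "object", "properties": properties, "required": []},
    }

schema = build_screener_schema()
print(json.dumps(schema["parameters"]["properties"]["beta_less_than"], indent=2))
```

The returned dictionary could be passed as the single element of the `functions` list; whether the extra descriptions improve the model's argument choices is something to verify on your own queries.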

```python
def run_conversation(user_message):
    response = openai.ChatCompletion.create(
        model="gpt-4-0613",
        messages=[{"role": "user", "content": user_message}],
        functions=[
            {
                "name": "stock_screener",
                "description": "Fetch stock screening data from the FMP API",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "market_cap_more_than": {"type": "number"},
                        "market_cap_less_than": {"type": "number"},
                        "price_more_than": {"type": "number"},
                        "price_less_than": {"type": "number"},
                        "beta_more_than": {"type": "number"},
                        "beta_less_than": {"type": "number"},
                        "volume_more_than": {"type": "number"},
                        "volume_less_than": {"type": "number"},
                        "dividend_more_than": {"type": "number"},
                        "dividend_less_than": {"type": "number"},
                        "is_etf": {"type": "boolean"},
                        "is_actively_trading": {"type": "boolean"},
                        "sector": {"type": "string"},
                        "industry": {"type": "string"},
                        "country": {"type": "string"},
                        "exchange": {"type": "string"},
                        "limit": {"type": "number"},
                    },
                    "required": [],
                },
            }
        ],
        function_call="auto",
    )

    message = response["choices"][0]["message"]
```

Step 3: Calling the Function and Formatting the Response

Next up, we're ready to make the function call (if GPT decides to do so), and generate a formatted response that can be fed back to GPT for a second time.

Here's an overview of how this code works:

1. Function Call Check:

First, we'll write an if statement to check if the GPT response contains a function_call. If it does, this means the model has decided to call the stock_screener function based on the user's input.

2. Extract Function Name and Arguments:

If the model has chosen to call a function, the next step is to extract the function name and its arguments from the message and store them in function_name and function_args, respectively.

3. Call the Stock Screener Function:

With the function name and args extracted, we can now call the stock_screener function. These arguments might include various parameters for stock screening such as market capitalization, price, beta, volume, dividend yield, and others.

The function's result, which is a JSON string containing stock screening data, is parsed back into a Python object and stored in the data variable.

4. Generate the Function Response:

Next, we create a new function_response variable to hold the text that will be passed back to GPT. We then loop over the data list (each element contains info about a particular stock), and the relevant data is extracted and formatted into a readable string.

This string includes details like the stock's symbol, company name, market cap, sector, industry, beta, volume, exchange, and last annual dividend. The function_response is thus a summary of all stocks returned by the stock screener function, ready to be fed back into our second GPT API call.
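Because the arguments string is generated by the model, it may occasionally be malformed JSON or contain keys the function does not accept. A minimal defensive sketch, assuming a hypothetical helper `parse_function_args` and an allow-list of parameter names taken from the function schema:

```python
import json

ALLOWED_ARGS = {
    "market_cap_more_than", "market_cap_less_than",
    "price_more_than", "price_less_than",
    "beta_more_than", "beta_less_than",
    "volume_more_than", "volume_less_than",
    "dividend_more_than", "dividend_less_than",
    "is_etf", "is_actively_trading",
    "sector", "industry", "country", "exchange", "limit",
}

def parse_function_args(raw_arguments):
    """Parse the model's arguments string, returning {} if it is not a JSON object."""
    try:
        parsed = json.loads(raw_arguments)
    except (json.JSONDecodeError, TypeError):
        return {}
    if not isinstance(parsed, dict):
        return {}
    # Drop any keys the screener function does not accept.
    return {k: v for k, v in parsed.items() if k in ALLOWED_ARGS}

good = parse_function_args('{"sector": "Technology", "beta_less_than": 1.5, "mood": "bullish"}')
bad = parse_function_args('{"sector": "Technology"')  # truncated JSON
print(good)
print(bad)
```

The cleaned dictionary could then be unpacked into the screener call, with defaults such as the exchange list and the limit applied beforehand.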

```python
    # Continues inside run_conversation()
    if message.get("function_call"):
        function_name = message["function_call"]["name"]
        function_args = json.loads(message["function_call"]["arguments"])

        data = json.loads(stock_screener(
            market_cap_more_than=function_args.get("market_cap_more_than"),
            market_cap_less_than=function_args.get("market_cap_less_than"),
            price_more_than=function_args.get("price_more_than"),
            price_less_than=function_args.get("price_less_than"),
            beta_more_than=function_args.get("beta_more_than"),
            beta_less_than=function_args.get("beta_less_than"),
            volume_more_than=function_args.get("volume_more_than"),
            volume_less_than=function_args.get("volume_less_than"),
            dividend_more_than=function_args.get("dividend_more_than"),
            dividend_less_than=function_args.get("dividend_less_than"),
            is_etf=function_args.get("is_etf"),
            is_actively_trading=function_args.get("is_actively_trading", "True"),
            sector=function_args.get("sector"),
            industry=function_args.get("industry"),
            country=function_args.get("country"),
            exchange=function_args.get("exchange", "NASDAQ,NYSE,AMEX"),
            limit=function_args.get("limit", 10)
        ))

        function_response = ""
        for i, stock_info in enumerate(data):
            function_response += (
                f"\n\nStock {i+1}:"
                f"\n- Symbol: {stock_info['symbol']}"
                f"\n- Company Name: {stock_info['companyName']}"
                f"\n- Market Cap: {stock_info['marketCap']}"
                f"\n- Sector: {stock_info['sector']}"
                f"\n- Industry: {stock_info.get('industry', 'N/A')}"
                f"\n- Beta: {stock_info['beta']}"
                f"\n- Volume: {stock_info['volume']}"
                f"\n- Exchange: {stock_info['exchange']}"
                f"\n- Last Annual Dividend: {stock_info['lastAnnualDividend']}"
            )
```
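The loop above indexes most fields directly, so a missing key or a non-list payload from the API would raise an exception. A more tolerant variant might look like this; `format_stocks` is a hypothetical helper, and the sample record is made up for illustration.

```python
FIELDS = [
    ("Symbol", "symbol"),
    ("Company Name", "companyName"),
    ("Market Cap", "marketCap"),
    ("Sector", "sector"),
    ("Industry", "industry"),
    ("Beta", "beta"),
    ("Volume", "volume"),
    ("Exchange", "exchange"),
    ("Last Annual Dividend", "lastAnnualDividend"),
]

def format_stocks(data):
    """Turn the screener's list of stock dicts into the text fed back to GPT."""
    if not isinstance(data, list):
        # Anything other than a list (for example an error object) yields no stocks.
        return ""
    blocks = []
    for i, stock in enumerate(data, start=1):
        lines = [f"Stock {i}:"]
        # Missing fields are shown as N/A instead of raising KeyError.
        lines += [f"- {label}: {stock.get(key, 'N/A')}" for label, key in FIELDS]
        blocks.append("\n".join(lines))
    return "\n\n".join(blocks)

sample = [{"symbol": "DEMO", "companyName": "Demo Corp", "marketCap": 1500000000}]
summary = format_stocks(sample)
print(summary)
```

An empty string pairs naturally with the system prompt used in the next step, which tells the model to report that no stocks match when there is no API response.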

Step 4: Make a second GPT API call with the function response

The fourth step involves making another API call to the ChatCompletion.create() method with the function call response. Here's an overview of how this works:

1. Response Placeholder

First, we create a placeholder response with st.empty(), which will be used later to display the assistant's responses to the user in real-time streaming format.

2. Creating a Second OpenAI Call

Next, we make our second GPT API call with the ChatCompletion.create() method, using the same model as before. The messages sent to GPT-4 this time, however, are different: they include a system message with formatting instructions, the user's message, the assistant's initial response, and a function message containing the function's name and its output. The function's content is function_response, which contains the stock screening data processed in the previous step. The stream option is set to True, which allows us to receive the response in chunks to enable real-time streaming.

3. Streaming Assistant's Responses

As OpenAI sends back responses in chunks (due to the streaming flag being set to True), the assistant's responses are accumulated in assistant_response and displayed in the Streamlit application in real-time. This is done using a for loop, which iterates over each chunk in the response.

- If the chunk contains a role, the loop continues to the next chunk. This is because chunks with a 'role' are not part of the assistant's message.

- If the chunk contains content, the corresponding text is extracted and added to assistant_response, which is then displayed in the Streamlit application using the placeholder_response.markdown() function, which updates the previously created placeholder with the latest assistant response.

Finally, the function returns the full assistant_response, which is the complete assistant's response generated from the second OpenAI API call.
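The accumulation logic can be exercised without an API call by feeding it hand-written chunks shaped like the streamed deltas. In this sketch `fake_chunks` and `collect_stream` are assumptions for illustration, and a plain callback stands in for `placeholder_response.markdown()`.

```python
def collect_stream(chunks, on_update=None):
    """Accumulate streamed delta content, reporting each partial result."""
    text = ""
    for chunk in chunks:
        delta = chunk["choices"][0]["delta"]
        if "role" in delta:
            # Role-announcing chunks are skipped, as in the loop described above.
            continue
        if "content" in delta:
            text += delta["content"]
            if on_update is not None:
                on_update(text)
    return text

fake_chunks = [
    {"choices": [{"delta": {"role": "assistant"}}]},
    {"choices": [{"delta": {"content": "Here are "}}]},
    {"choices": [{"delta": {"content": "two stocks."}}]},
    {"choices": [{"delta": {}}]},  # a closing chunk with an empty delta
]

updates = []
final_text = collect_stream(fake_chunks, on_update=updates.append)
print(final_text)
```

Each call to the callback receives the full text so far, which is why the Streamlit placeholder can simply be overwritten on every chunk.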

```python
        # Continues inside the `if message.get("function_call"):` block

        # Create a placeholder to stream the assistant's responses to Streamlit
        placeholder_response = st.empty()

        # Make a new API call to OpenAI with the previous message's content and the function response, and apply streaming
        second_response = openai.ChatCompletion.create(
            model="gpt-4-0613",
            messages=[
                {"role": "system", "content": """You are a stock screening assistant designed to help users find stocks based on natural language inputs. You can find stocks based on market capitalization, price, beta, volume, dividend, ETF status, actively trading status, sector, industry, country, and exchange.

Follow these instructions in your response:
- Market capitalization should always be in trillion, billion, or million (i.e 1 billion instead of 1000000000)
- Also use human readable format for price, beta, volume, and dividend (i.e 1 billion instead of 1000000000)
- Use 2 decimal places for market cap, price, beta, volume, and dividend
- Write company names in human readable format for company names (i.e exclude Inc, Ltd, etc.)
- add $ in front of market capitalization, price, and dividend - but escape it with a backslash (i.e \\$) for markdown formatting
- Only respond if there is an API response, if not say that there are no stocks that match the criteria
"""},
                {"role": "user", "content": user_message},
                {"role": "assistant", "content": message["content"] if message["content"] else 'Initiating function call...'},
                {
                    "role": "function",
                    "name": function_name,
                    "content": function_response,
                },
            ],
            stream=True
        )

        # Display the response in Streamlit in real-time as chunks are received
        assistant_response = ""
        for chunk in second_response:
            if "role" in chunk["choices"][0]["delta"]:
                continue
            elif "content" in chunk["choices"][0]["delta"]:
                r_text = chunk["choices"][0]["delta"]["content"]
                assistant_response += r_text
                placeholder_response.markdown(assistant_response, unsafe_allow_html=True)

        return assistant_response
```

Step 5: Building the Streamlit App

The last step is to build a simple Streamlit app to provide users with an interface to interact with the stock screening assistant.

Here's an overview of how this works:

- Define the application function: We create a function called stock_screener_app(), which launches the Stock Screener Assistant application.

- Set the title & instructions: Using st.title(), the title of the application is set to 'Stock Screener Assistant'. Next, st.write() is used to display instructions to the user. In this case, the instruction is to enter a stock screening query.

- Create a Text Input Field: We then create a text input field with st.text_input() where the user can type their query.

- Create a Run Button: st.button() is used to create a 'Run' button. When this button is clicked, it triggers the run_conversation() function that was defined earlier. This function takes user_input as a parameter, which means that the conversation will be based on whatever query the user has entered.

- Run the app: Finally, the script ends with a call to the stock_screener_app function to run the application.

```python
def stock_screener_app():
    st.title('Stock Screener Assistant')
    st.write('Please enter your stock screening query below:')

    user_input = st.text_input("Ex. show me tech stocks with a market cap greater than 1b and a beta less than 1.5")

    if st.button('Run'):
        run_conversation(user_input)


if __name__ == "__main__":
    stock_screener_app()
```

Summary: GPT Function Calling

As we saw in this guide, the new function calling capability of GPT-3.5/4 makes converting natural language into API calls significantly easier.

While this functionality was previously possible with external tools, having models that OpenAI has fine-tuned to detect when a function should be called and to output the correct format is a major time saver and drastically improves reliability.

In my opinion, it's quite clear that natural language to API calls will become the norm for many apps, particularly data-heavy apps. To that end, we'll continue to explore this function calling capability with several more real-world applications.